18.12.2024

This year, charitable contributions by Ukrainians for military needs have decreased by 15% to 40%. Bloomberg journalists recently reported this, based on comments from Ukrainian charitable Foundations. In addition to the reasons the journalists listed in their publication, there is another one they did not mention, which Molfar decided to investigate. It works over the long term, against a background of sharply negative messages in public discussion: attacks on charitable Foundations in the media and on social networks, involving bot farms and even real opinion leaders, aimed at discrediting the Foundations.

Since the start of Russiaʼs full-scale invasion of Ukraine, Ukrainian charities that raise money to supply the army have been attacked many times. Against the background of a general rise in pessimism among Ukrainians, the Foundations have recently faced more and more attacks in the media and on social media, which has led to a decrease in citizensʼ donations for the needs of the Ukrainian army.

Therefore, Molfar OSINT analysts decided to review all the attacks that took place from February 20, 2022, to October 2024 (and one more in November 2024) and targeted the five largest Ukrainian charities that raise funds for the needs of Ukraineʼs Security and Defense Forces. According to Forbes, the largest funds are those that raise the most money for the military each year: the United24 Platform, Come Back Alive Foundation, Serhiy Prytula Foundation, KSE Foundation, and Petro Poroshenko Foundation + NGO Solidarna Sprava Hromad (Solidarity Cause of Communities).

How much did Ukrainian charitable Foundations collect in donations (voluntary financial contributions) in 2022, 2023, and part of 2024?

Foundation | Type of ownership | Type of Foundation | FYʼ2022, bn, UAH | FYʼ2023, bn, UAH | H1ʼ2024 bn, UAH |

United24 Platform | government | Military, Humanitarian | 8,6 | 12,07 | 5,981 |

NGO | Military | ||||

NGO | Military | 3,9 | 1,002 | ||

NGO | Military, Humanitarian | (~$40 M) | 1,69 M | 0,04 ($1,23 M) | |

Petro Poroshenko Foundation + NGO Solidarna Sprava Hromad | NGO, private | Military, Humanitarian | 4,083 | 1,424 | |

1 - reporting as of May 2024

2 - data from reports on the Foundationʼs FB and Inst.

3 - according to the data as of 27.12.2023 from the consolidated reports of the Petro Poroshenko Foundation and the NGO Solidarna Sprava Hromad.

4 - according to the data as of 29.06.2024 from the consolidated reports of the Petro Poroshenko Foundation and the NGO Solidarna Sprava Hromad, they have raised a total of UAH 5.5 billion since the beginning of the full-scale invasion, i.e., UAH 1.42 billion in H1ʼ2024.

To investigate who was behind the attacks, what types of virtual attacks were launched on social media, and on which platforms, Molfar analysts used the algorithms and methodology described at the end of the article.

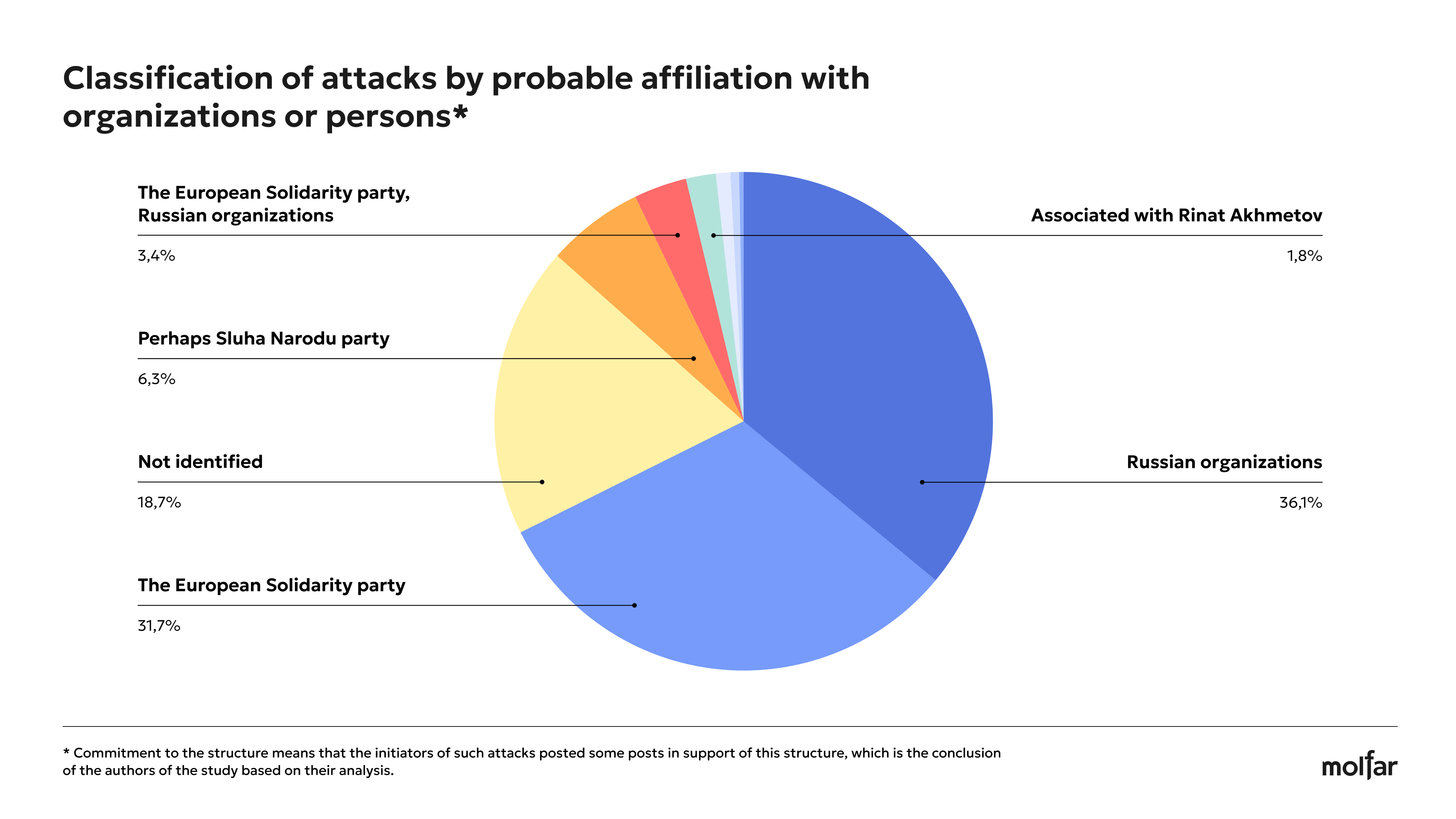

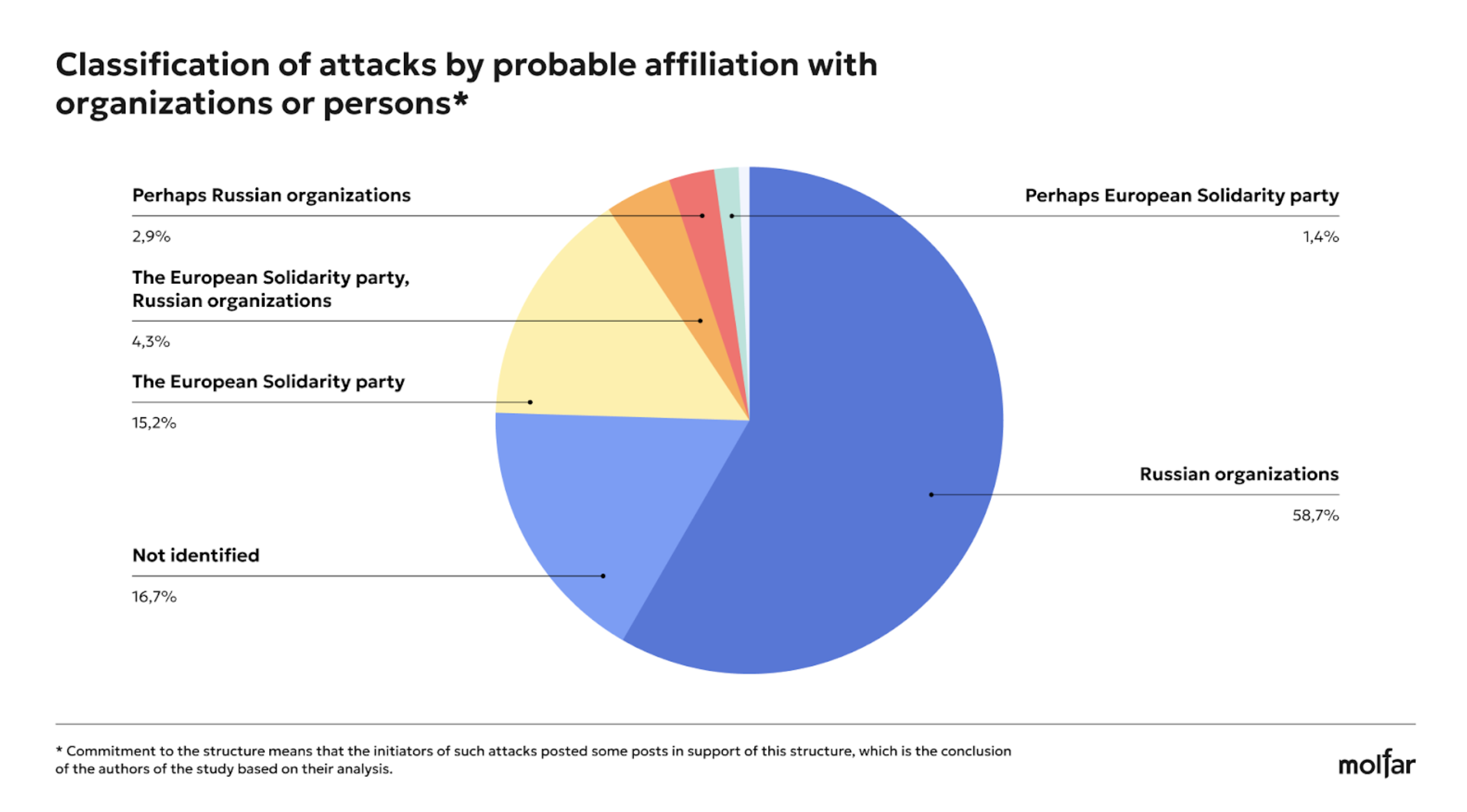

Out of 379 cases of attacks on funds, 36% have a clear Russian trace

During the investigation of the attacks from the beginning of the full-scale invasion to October 2024, Molfar found 379 negative posts and articles involved in attacks on 5 charitable Foundations. The analysts classified all the attacks by the main types of theses for each Foundation. They identified connections between potential supporters of different structures, where possible.

It might seem that 379 attacks on the 5 largest funds over the entire analyzed period are not that many. However, Molfar analysts started their analysis in November 2024, so some of the attacks may have been removed by moderators by then. Articles and posts could also have been removed by FB or Twitter themselves following user complaints, so Molfar analysts collected data only from what was still indexed. Some social networks, such as Facebook, have their own page indexing. Sometimes a post does not appear in the general FB search results, but it is shown if you search the authorʼs page using the exact keywords. This may be related to FBʼs internal rules for ranking search queries.

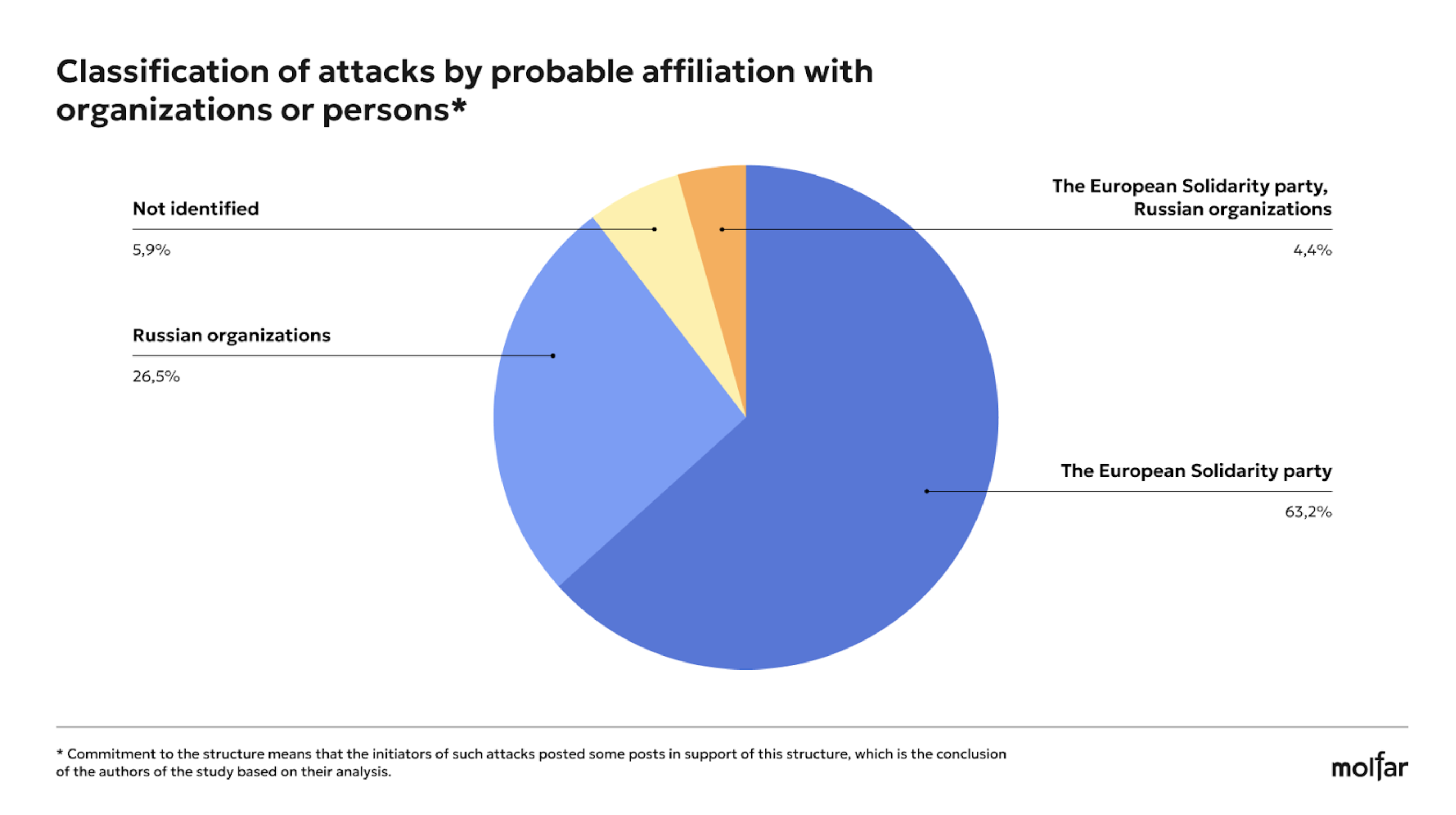

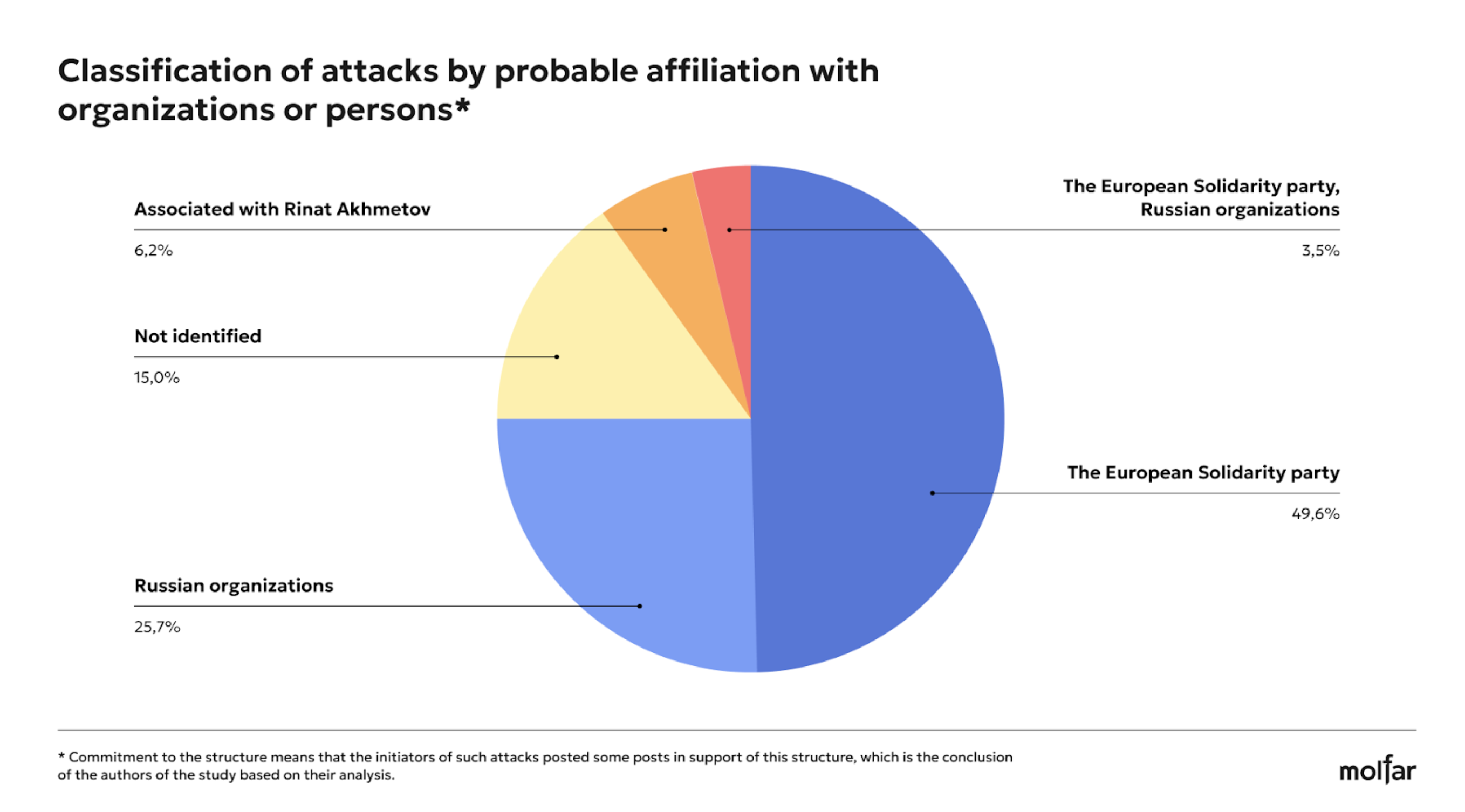

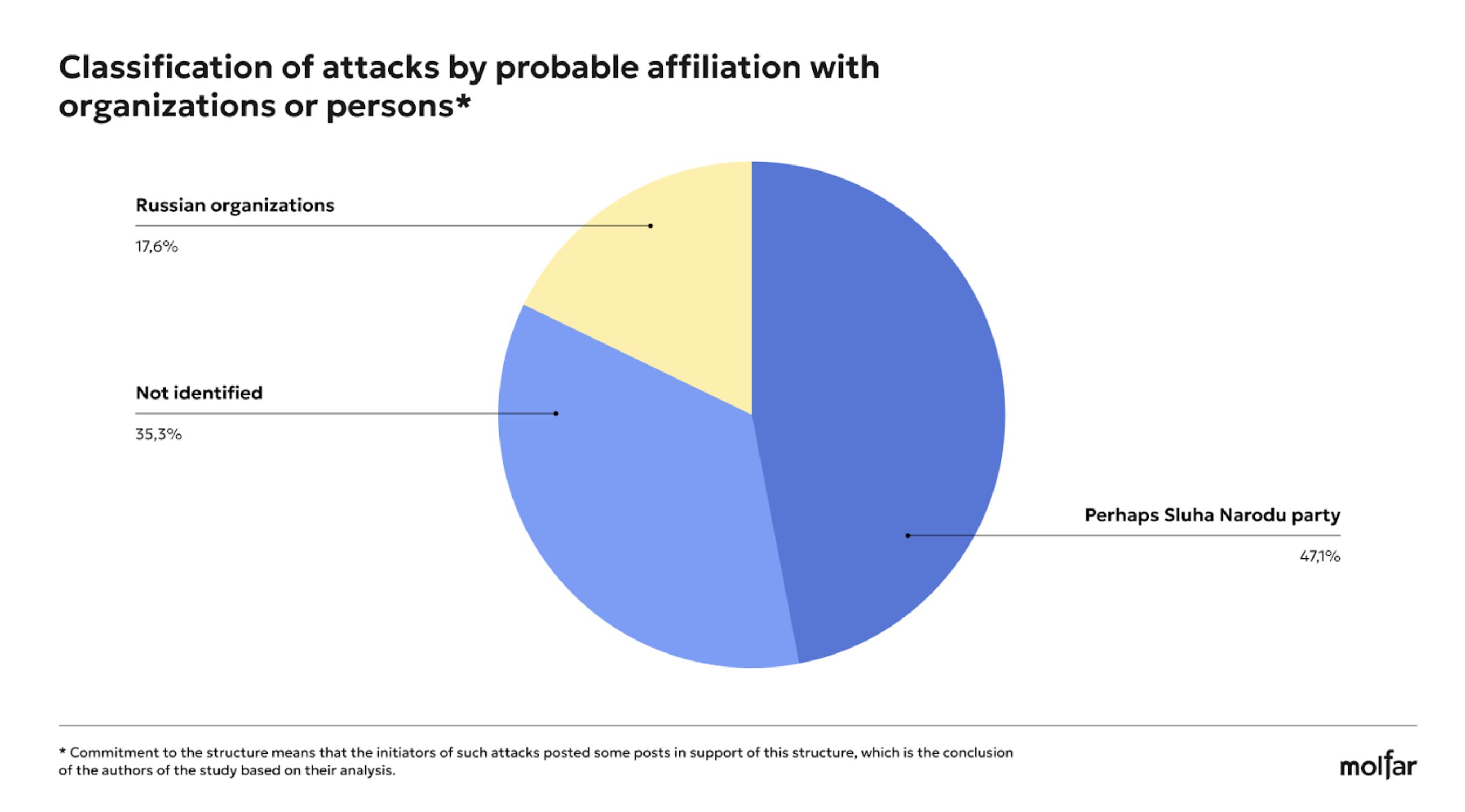

Molfar classified the attacks by their affiliation with organizations based on the data collected during the research. We emphasize that all affiliations with structures or persons are mentioned with the prefix “probably” and are the authorsʼ opinions. However, in some cases, there is direct evidence of attacks on a particular Foundation from affiliated structures or persons.

** Commitment to an organization means that the authors of the attacks published some posts supporting that organization; these conclusions are solely the opinion of the authors of this analysis. The probable affiliation with a particular entity is indicated based on the availability of sources that confirm it. In some cases, sources indicated only an indirect connection to the structure.

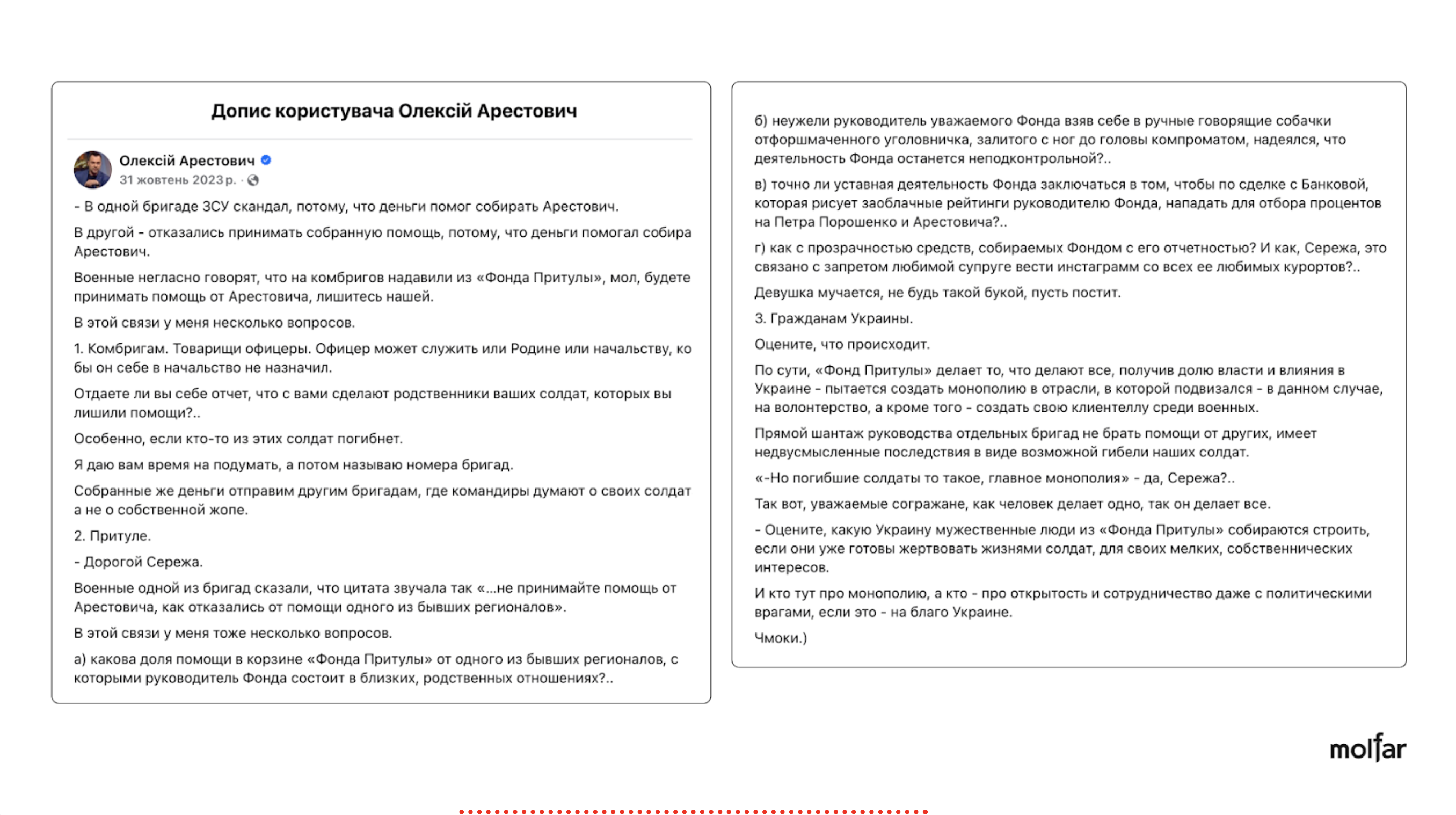

Therefore, as the analysis shows, the possible initiators of the attacks include, in addition to Russian structures, probable supporters of certain public figures or of the teams they are associated with. These include, in particular, supporters of the European Solidarity and Sluha Narodu (Servant of the People) parties, and possibly persons associated with Rinat Akhmetov and Oleksiy Arestovych. Because Bihus.info journalists reported in their article that the Smart Politics (Rozumna Polityka) faction of MPs and its head, Dmytro Razumkov, lobbied for Rinat Akhmetovʼs interests, Dmytro Razumkovʼs TG channel was classified as an entity loyal to, or associated with, Rinat Akhmetov. As for Arestovych, one of the cases was identified on Arestovychʼs own FB page.

Collage made from screenshots of Oleksiy Arestovychʼs FB post. This post appears to be an attack on the Foundationʼs reputation. Most likely, the main goal of the attack is personal self-promotion, using the common narrative of ʼblackmailing the military.ʼ The post was shared by Informator and Українські новини.

The Russian organizations that Molfar identified as potentially affiliated with the attacks are a generalized category covering Russian state structures (FSB, GRU, and others) as well as Russian propagandists and accounts that support Russia. For example, in 2021, the SBU exposed a network of Telegram channels controlled by the Russian GRU. After that, the Kyiv District Court of Kharkiv seized the intellectual property rights to four Telegram channels (“Легитимный,” “Резидент,” “Картель,” and “Сплетница”) and ordered that access to them be blocked. However, internet providers are unable to implement this blocking.

Probable commitment | Serhiy Prytula Foundation | United24 | Come Back Alive Foundation | Petro Poroshenko Foundations | KSE Foundation | Total |

Russian organizations | 81 | 29 | 18 | 9 | 0 | 137 |

European Solidarity party | 21 | 56 | 43 | 0 | 0 | 120 |

Not identified | 23 | 17 | 4 | 18 | 9 | 71 |

Probably Sluha Narodu party | 0 | 0 | 0 | 24 | 0 | 24 |

European Solidarity party, Russian organizations | 6 | 4 | 3 | 0 | 0 | 13 |

Associated with Rinat Akhmetov | 0 | 7 | 0 | 0 | 0 | 7 |

Probably, Russian organizations | 4 | 0 | 0 | 0 | 0 | 4 |

Probably, European Solidarity party | 2 | 0 | 0 | 0 | 0 | 2 |

Oleksiy Arestovych | 1 | 0 | 0 | 0 | 0 | 1 |

Total | 138 | 113 | 68 | 51 | 9 | 379 |

If the authors of the report could not identify which organization the attackers supported, or if the channel/account did not clearly express affiliation with any one organization and did not publish pro-Russian narratives, the attacks were classified as “not identified.” Probable allegiance to a particular organization is based on the sufficiency of sources that confirm the affiliation. In some cases, sources indicated only an indirect connection to an organization.
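The totals and shares in the table above can be recomputed directly from the per-Foundation cells. The following Python sketch does that; the variable and function names are illustrative assumptions, and only the numbers are taken from the table.

```python
# Per-Foundation counts copied from the table above, in column order:
# Serhiy Prytula Foundation, United24, Come Back Alive,
# Petro Poroshenko Foundations, KSE Foundation.
attacks_by_commitment = {
    "Russian organizations": [81, 29, 18, 9, 0],
    "European Solidarity party": [21, 56, 43, 0, 0],
    "Not identified": [23, 17, 4, 18, 9],
    "Probably Sluha Narodu party": [0, 0, 0, 24, 0],
    "European Solidarity party, Russian organizations": [6, 4, 3, 0, 0],
    "Associated with Rinat Akhmetov": [0, 7, 0, 0, 0],
    "Probably, Russian organizations": [4, 0, 0, 0, 0],
    "Probably, European Solidarity party": [2, 0, 0, 0, 0],
    "Oleksiy Arestovych": [1, 0, 0, 0, 0],
}


def share_table(rows):
    """Return {label: (row_total, percent_of_all_attacks)}."""
    grand = sum(sum(cells) for cells in rows.values())
    return {
        label: (sum(cells), round(100 * sum(cells) / grand, 1))
        for label, cells in rows.items()
    }


shares = share_table(attacks_by_commitment)
grand_total = sum(total for total, _ in shares.values())

for label, (total, pct) in shares.items():
    print(f"{label}: {total} of {grand_total} ({pct}%)")
```

With these figures, the row attributed to Russian organizations accounts for 137 of 379 attacks, or about 36%.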

34 million negative comments were posted by a Russian bot network in just 4 months, using false flag attacks

This spring, the German media outlets Süddeutsche Zeitung, NDR, and WDR and the Estonian online outlet Delfi investigated the Russian media agency Social Design Agency (SDA) using leaked data. The “agency” creates and manages a whole network of social media bots that spread pro-Russian narratives while posing as citizens of the countries they are trying to discredit. It appears that SDA works directly for the Russian presidential administration. Notably, when posting and commenting, the bots focus not only on overtly pro-Russian topics but also on issues that worry society as a whole: military mobilization, corruption, battlefield losses, restrictions on freedom of speech during martial law, reduced military aid, and the counteroffensive. Figures such as the current President of Ukraine, Volodymyr Zelenskyy, set against his predecessor, Petro Poroshenko, the mayor of Kyiv, Vitalii Klitschko, and the former commander-in-chief of the Armed Forces of Ukraine, Valerii Zaluzhniy, are used to stir up controversy on social media. From January to April 2024, SDA bots published almost 34 million negative comments under other usersʼ posts on various social media platforms.

By the way, the ex-leader of the Wagner PMC, Yevgen Prigozhin, who is now believed to be dead, had been financing and supervising the Internet Research Agency (IRA) since 2014; the agency spread pro-government and anti-opposition messages online. In particular, the violent annexation of Crimea and the beginning of the war in Donbas were carried out with media support from Prigozhinʼs bots. Molfar wrote about this in an article about the ex-head of Wagner PMC.

In the first weeks after the Russian full-scale invasion alone, Twitter blocked 100 accounts that spread fake news under the hashtag #istandwithputin and other Russian propaganda. By August 2022, the number of blocked accounts linked to the spread of Russian propaganda had grown to 75,000.

In May 2024, the SBU identified 46 bot farms coordinated from Russia, which had a capacity of 3 million accounts on various platforms and could spread narratives to an audience of 12 million people.

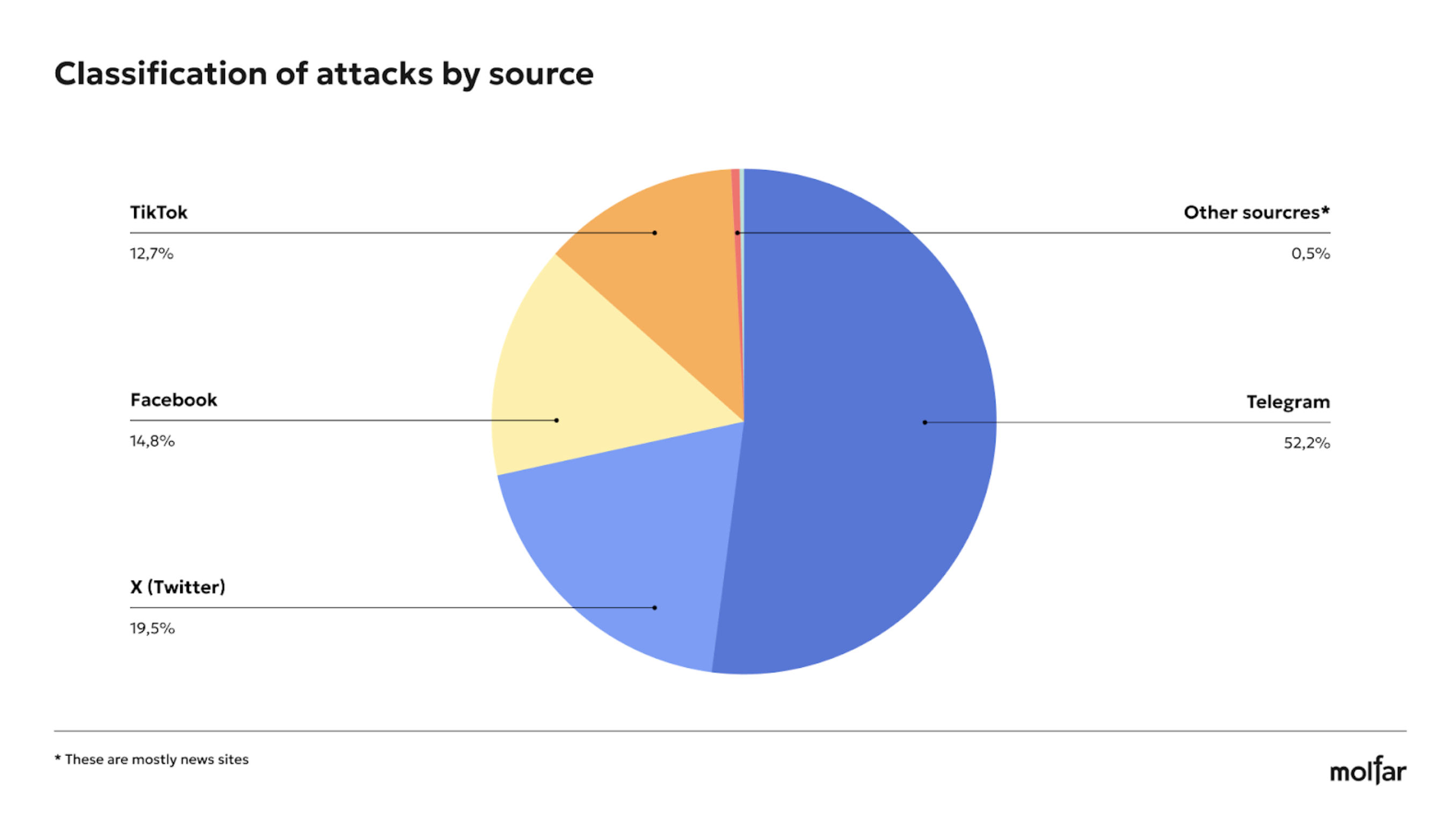

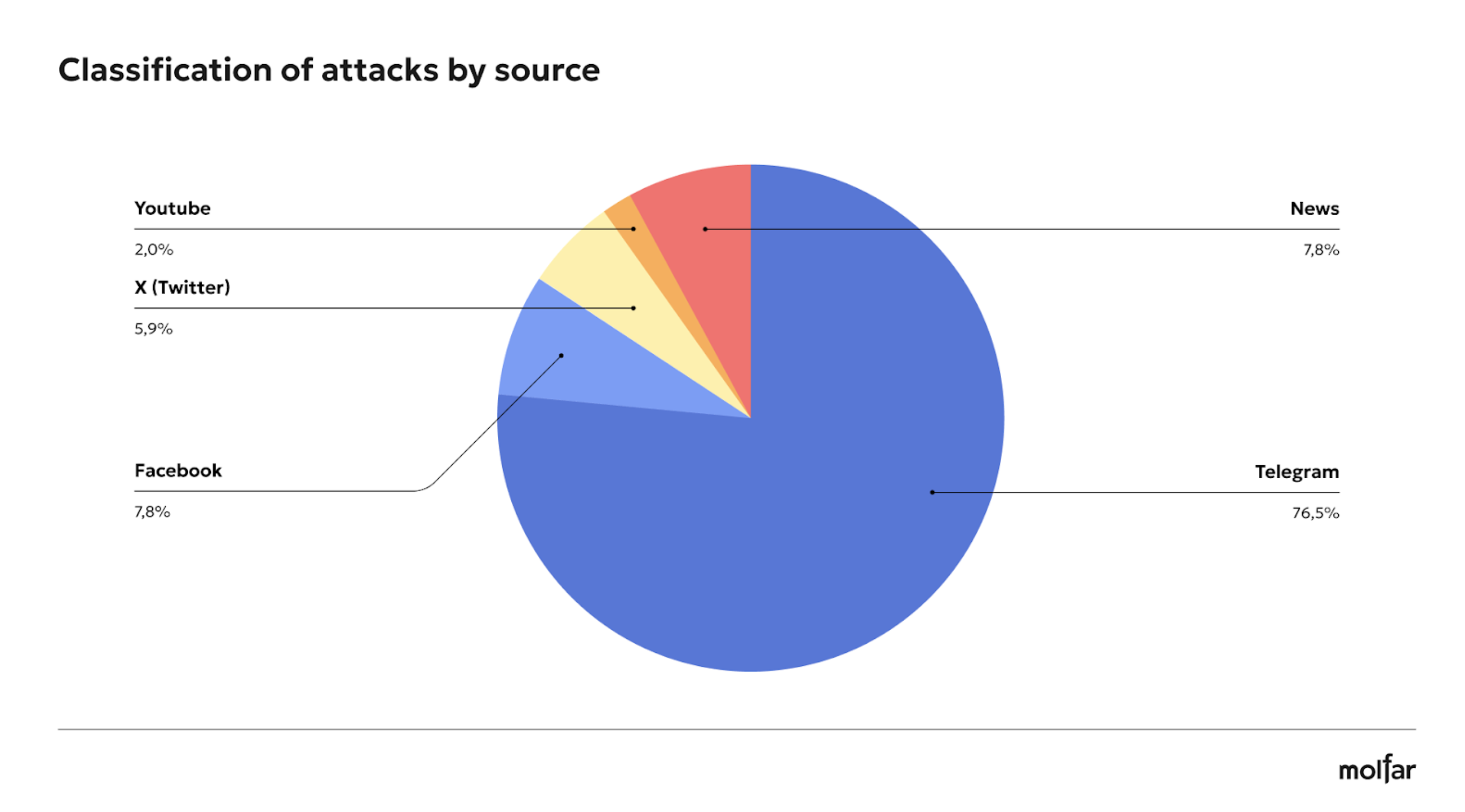

Telegram is the most commonly used platform for attacks. What other platforms are used to spread fake news and disinformation?

Ukrainians continue to use social media to get news. According to the National Institute for Strategic Studies, the share of those who do so increased from 51% in 2015 to 84% in 2024. Therefore, most of the attacks were launched on social media rather than through traditional media or news sites, because social media makes sharing posts extremely easy (1, 2, 3, 4, 5). In addition, social media has a higher level of audience engagement than classical media can offer. Telegram is perhaps the most relevant platform for attacks, as shown by Molfarʼs investigation of the sources through which the attacks were distributed.

Sources | Serhiy Prytula Foundation | United24 | Come Back Alive Foundation | Petro Poroshenko Foundations | KSE Foundation | Total |

TG | 83 | 53 | 23 | 39 | 0 | 198 |

TW | 11 | 31 | 29 | 3 | 0 | 74 |

FB | 16 | 19 | 13 | 4 | 4 | 56 |

Inst | 0 | 0 | 2 | 0 | 0 | 2 |

TikTok | 1 | 0 | 0 | 0 | 0 | 1 |

Other sources* | 27 | 10 | 1 | 5 | 5 | 48 |

Total | 138 | 113 | 68 | 51 | 9 | 379 |

According to Molfar research, these platforms have been used to attack the funds.

*Other sources — mainly news websites

52% of the attacks took place on Telegram, because this messenger is the leading news source for many Ukrainians today (for 72% of Ukrainian citizens, according to USAID). At the same time, some investigators call Telegram the main network used to spread fake news (1, 2) because, according to media reports (1, 2, 3, 4, 5), this messenger does not apply international standards for the quality and veracity of content published and distributed by channels and users.
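The Telegram share can be checked against the sources table in the same way. This is a minimal Python sketch with illustrative names; it recomputes each platformʼs total and each Foundationʼs total from the individual cells rather than relying on the printed "Total" column.

```python
foundations = [
    "Serhiy Prytula Foundation",
    "United24",
    "Come Back Alive Foundation",
    "Petro Poroshenko Foundations",
    "KSE Foundation",
]

# Cells copied from the sources table, one list per platform row.
attacks_by_platform = {
    "TG": [83, 53, 23, 39, 0],
    "TW": [11, 31, 29, 3, 0],
    "FB": [16, 19, 13, 4, 4],
    "Inst": [0, 0, 2, 0, 0],
    "TikTok": [1, 0, 0, 0, 0],
    "Other sources": [27, 10, 1, 5, 5],
}

# Column sums: how many attacks each Foundation received across all platforms.
per_foundation = dict(
    zip(foundations, (sum(column) for column in zip(*attacks_by_platform.values())))
)
total_attacks = sum(per_foundation.values())

# Row sums expressed as a percentage of all attacks.
platform_share = {
    platform: round(100 * sum(cells) / total_attacks, 1)
    for platform, cells in attacks_by_platform.items()
}

print(per_foundation)
print(platform_share)
```

The Telegram row sums to 198 of 379 attacks, which rounds to the 52% cited in the text.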

By analyzing the probable supporters of the organizations and overlaying the attacks on the platforms where they were carried out, we can trace where they are concentrated. For example, Russian entities mainly attack Foundations on Telegram.

Commitment** | TG | TW | FB | Inst | TikTok | Other sources | Total |

Russian organizations | 122 | 0 | 6 | 0 | 1 | 8 | 137 |

European Solidarity party | 30 | 33 | 12 | 0 | 0 | 45 | 120 |

Not identified | 15 | 3 | 17 | 2 | 0 | 34 | 71 |

Probably Sluha Narodu party | 21 | 1 | 0 | 0 | 0 | 2 | 24 |

European Solidarity party, Russian organizations | 3 | 5 | 1 | 0 | 0 | 4 | 13 |

Associated with Rinat Akhmetov | 7 | 0 | 0 | 0 | 0 | 0 | 7 |

Probably, Russian organizations | 3 | 0 | 0 | 0 | 0 | 1 | 4 |

Probably, European Solidarity party | 0 | 1 | 0 | 0 | 0 | 1 | 2 |

Oleksiy Arestovych | 0 | 0 | 1 | 0 | 0 | 0 | 1 |

Total | 201 | 43 | 37 | 2 | 1 | 95 | 379 |

* mostly news sites;

** Commitment to an entity means that the authors of the attacks posted some posts supporting that organization. This is the opinion of the authors of the report.

The probable affiliation to a particular entity is indicated based on the availability of sources that confirm a particular affiliation. In some cases, sources indicated an indirect connection to the structure.

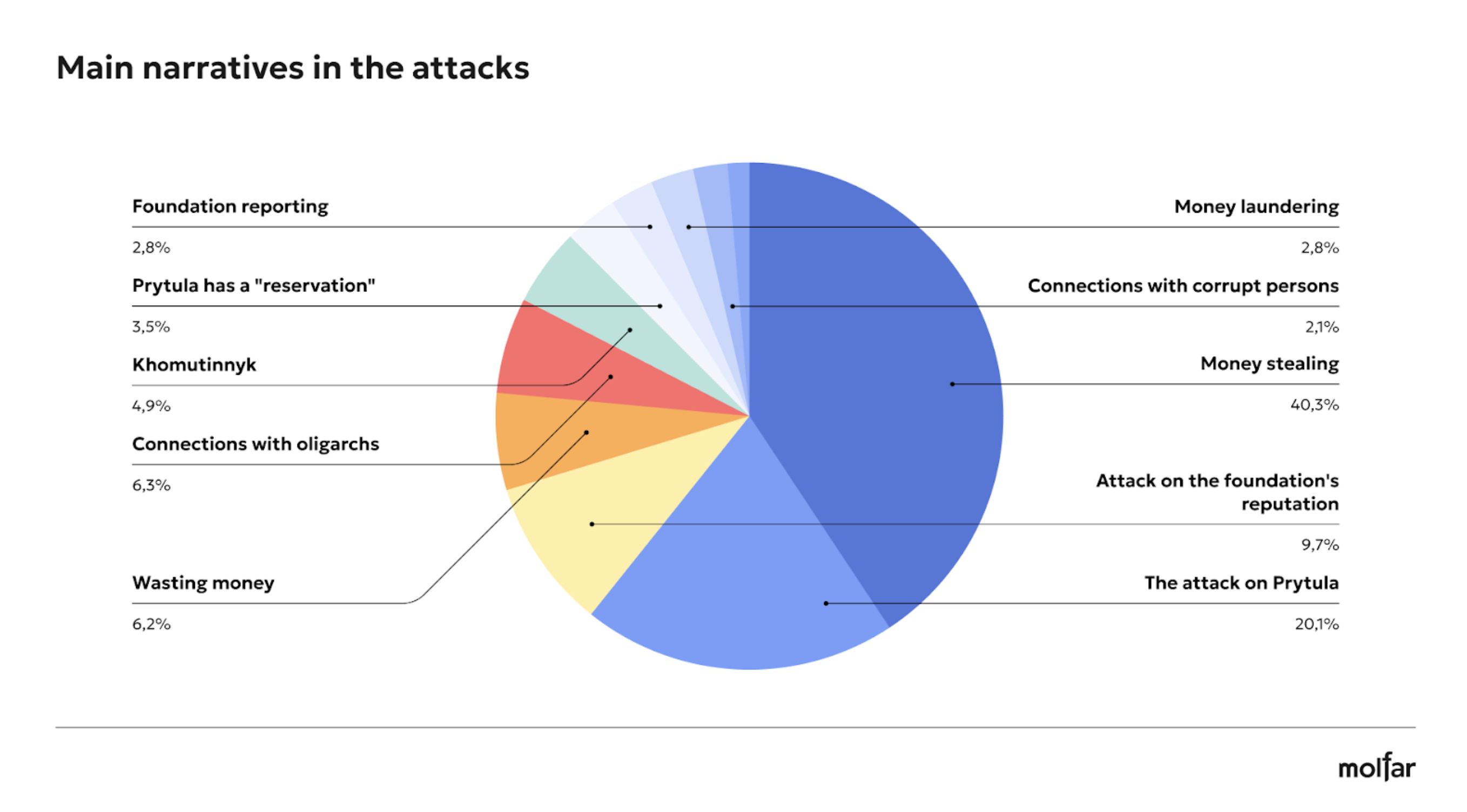

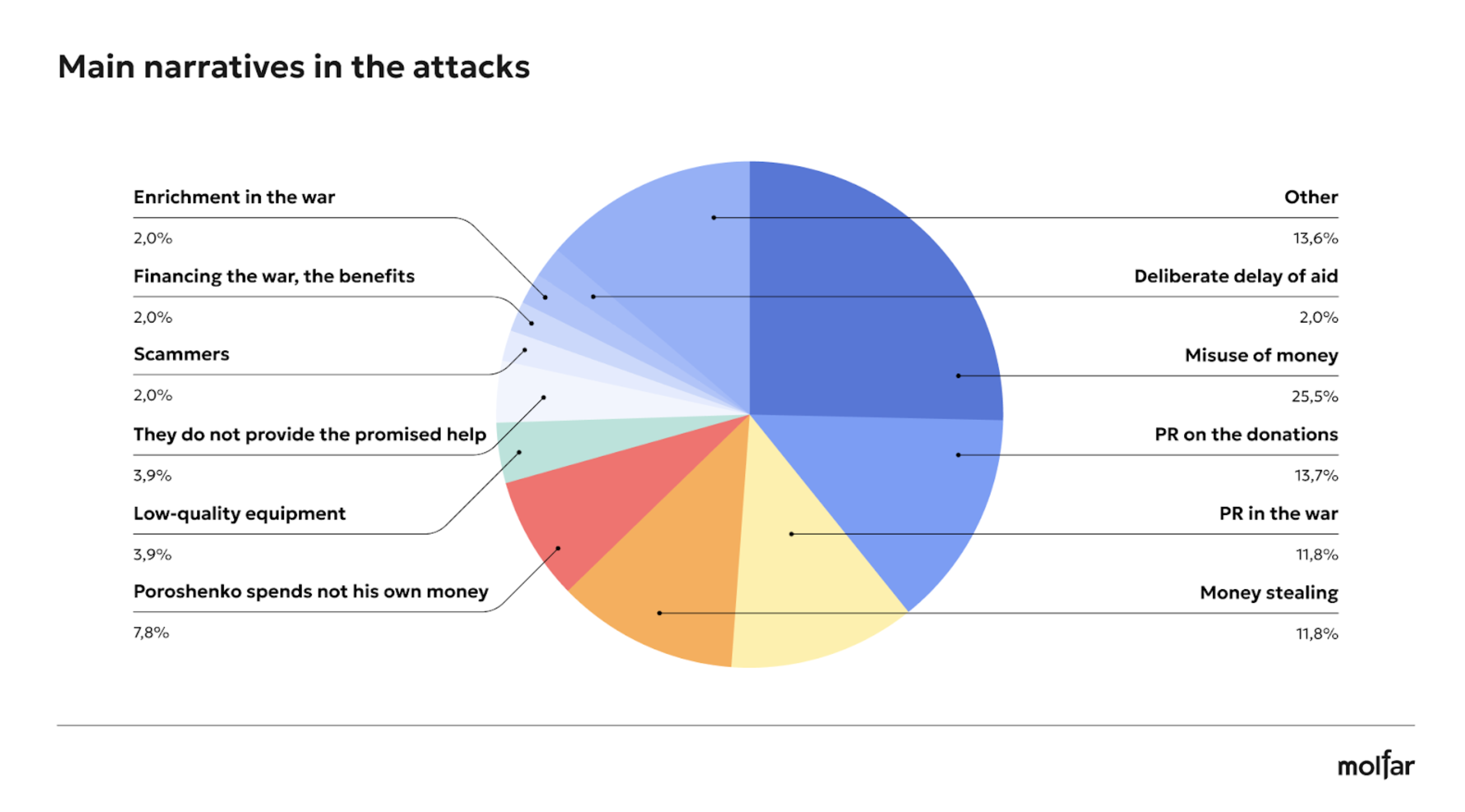

Waste, theft of money, PR on the war: which narratives are spread in attacks?

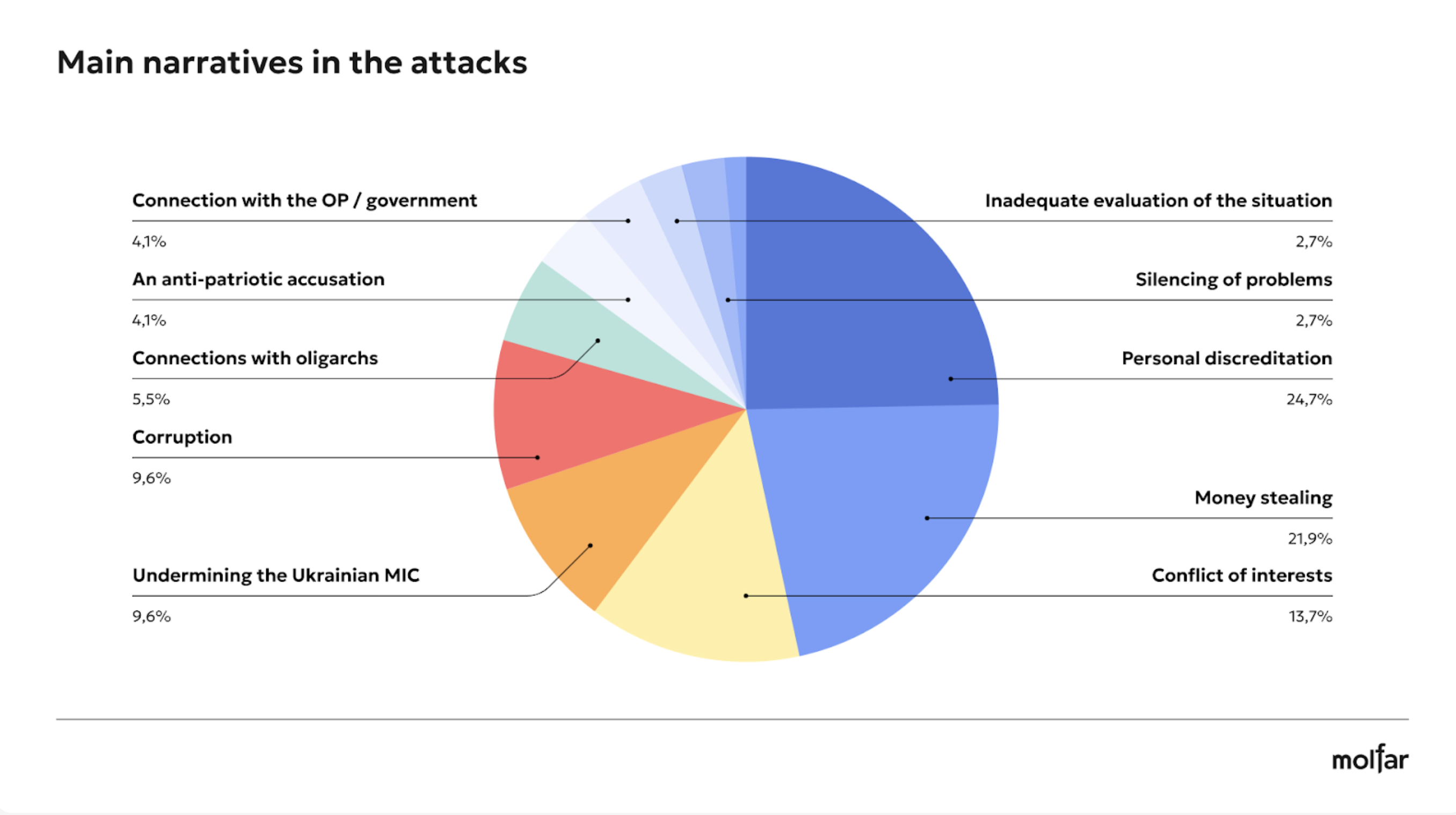

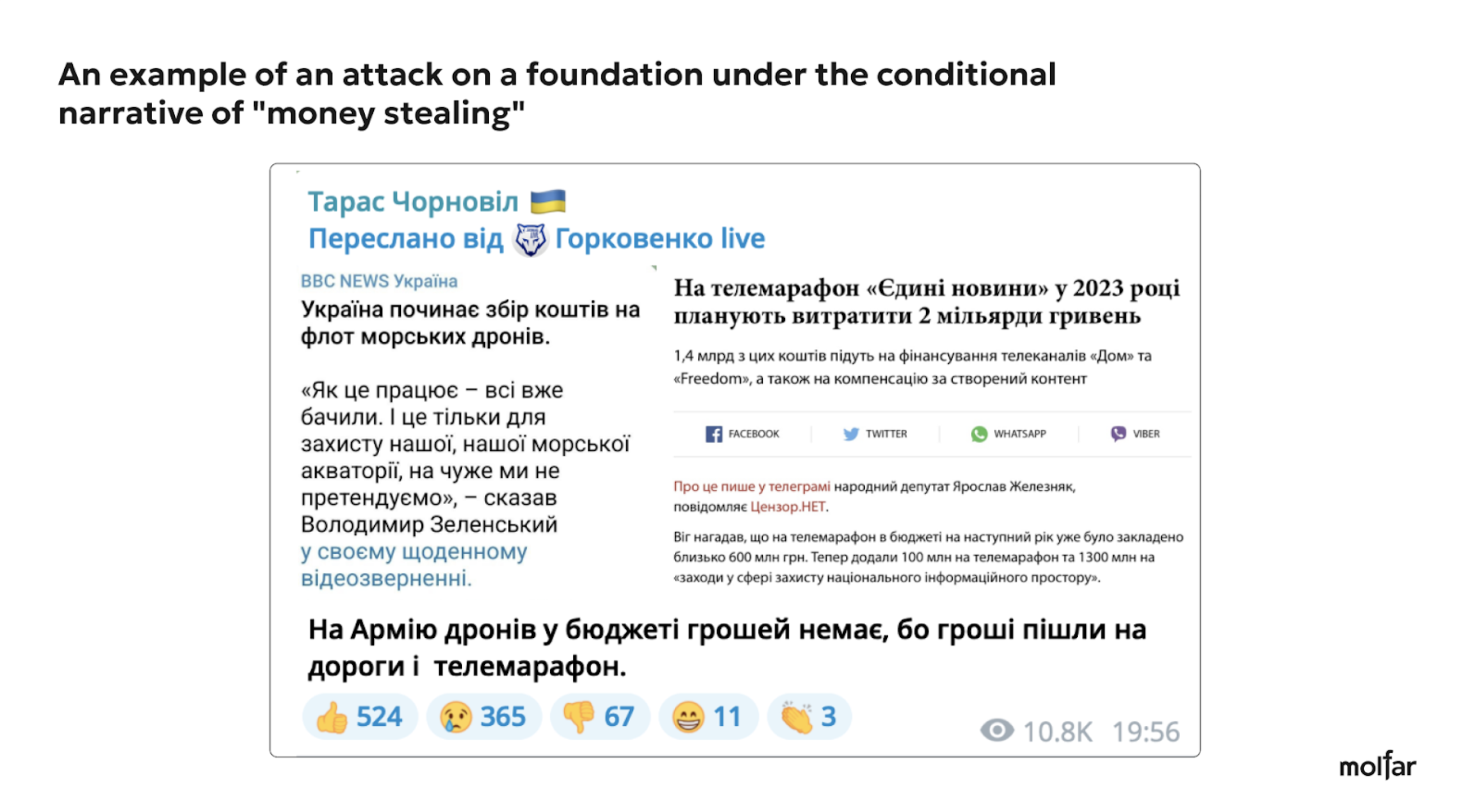

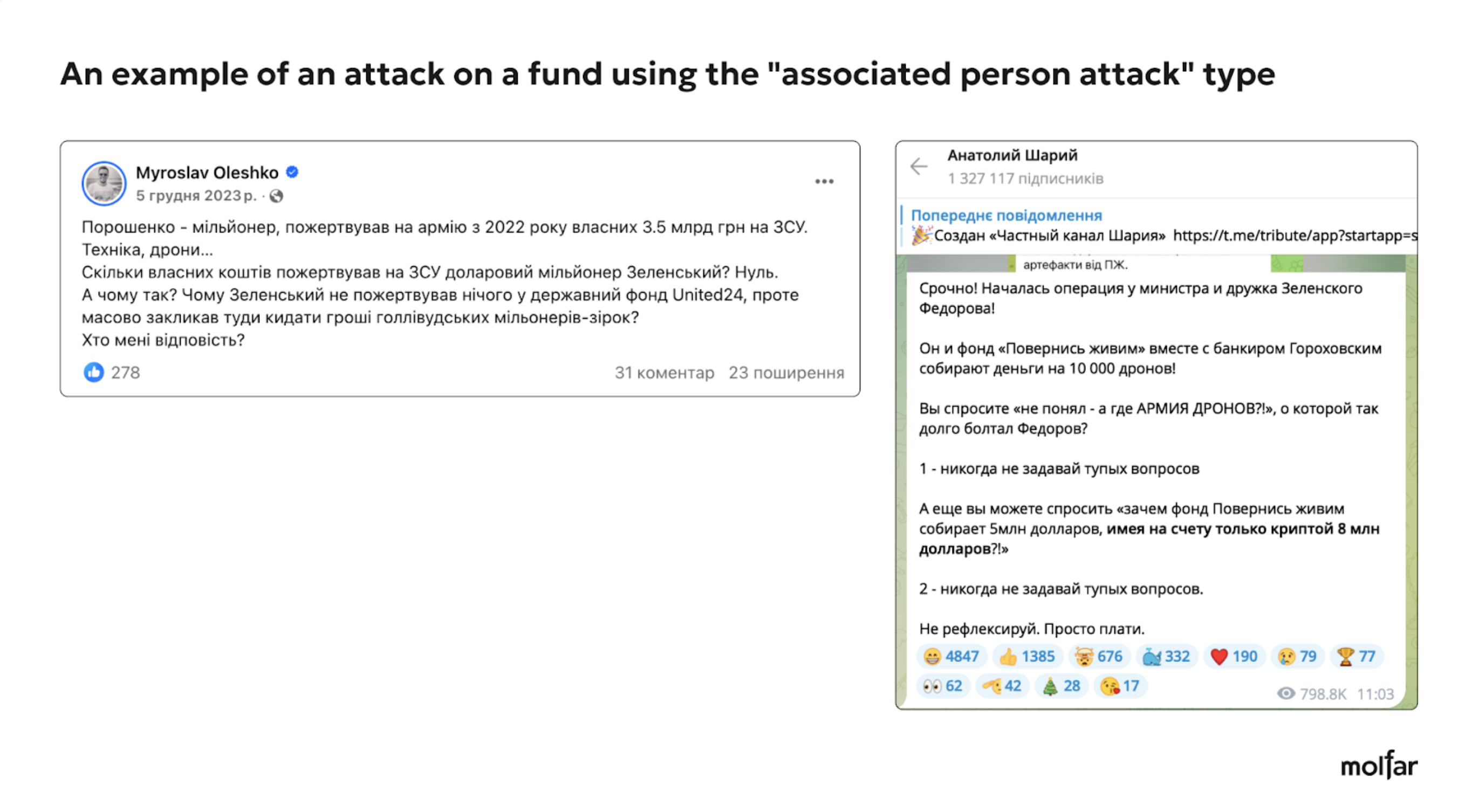

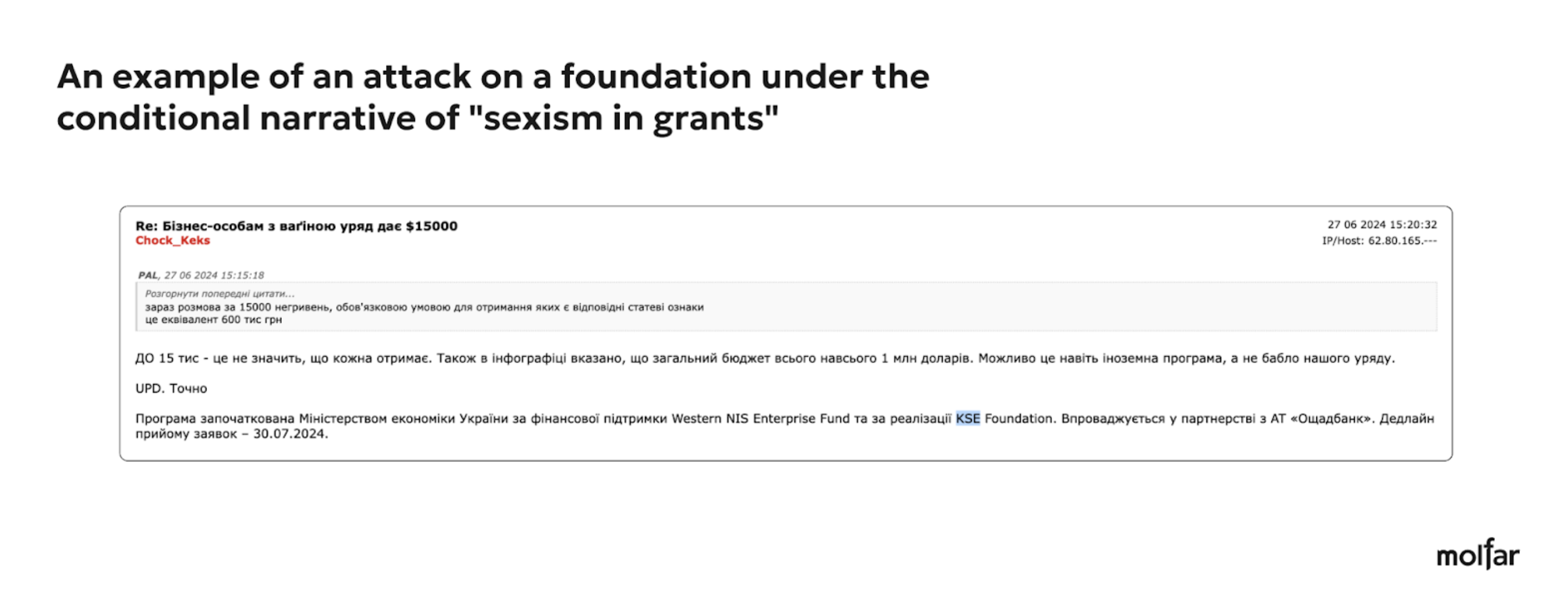

As mentioned above, attacks on Foundations take place in the context of public concerns, and even openly Russian attacks sometimes use topics that are the subject of intense public discussion, especially on the Internet. Molfar concluded that the attacks are aimed at spreading doubt about the integrity and purpose of charitable funds, along with similar narratives. This is the main pattern used against all five analyzed charities:

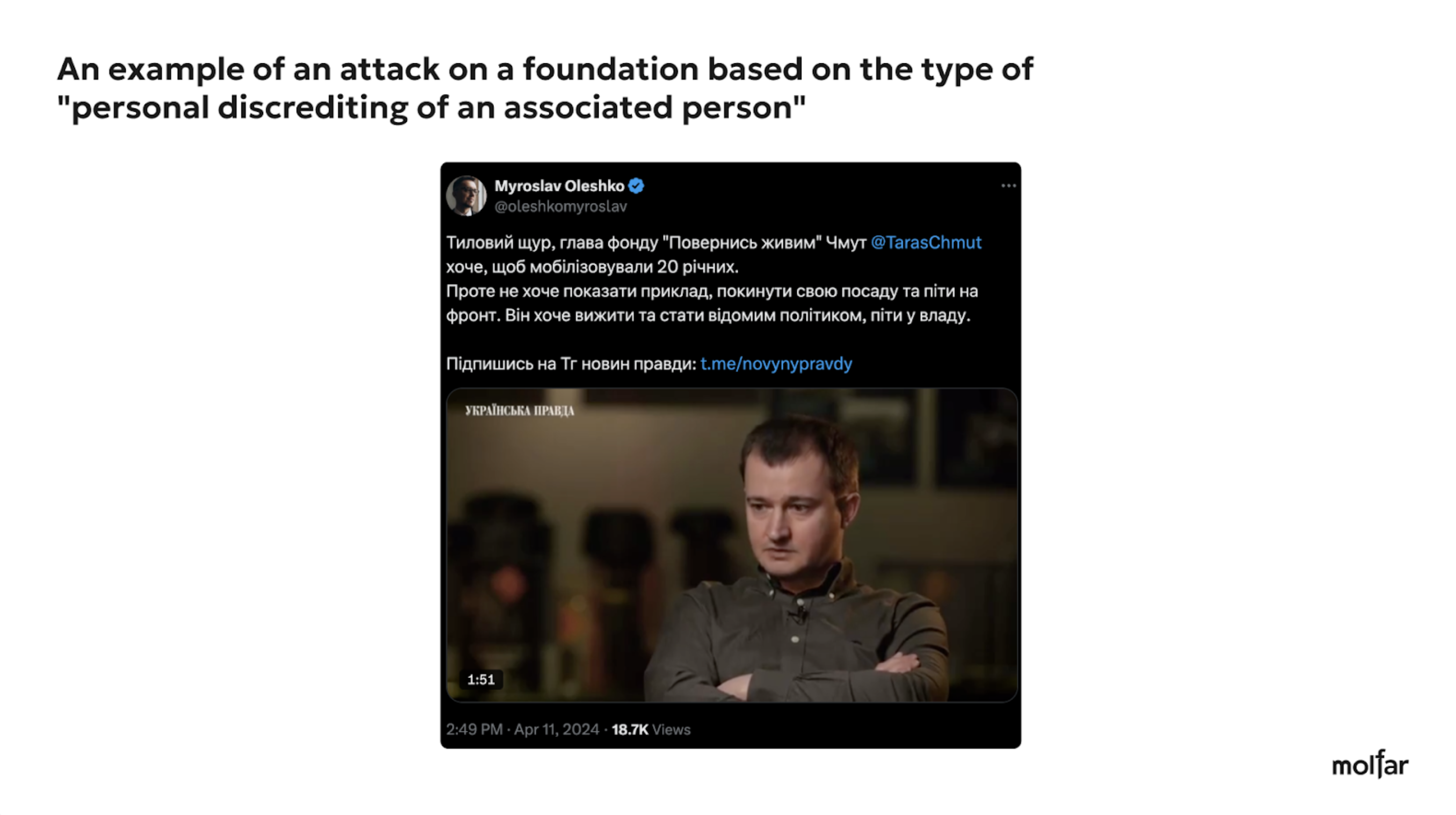

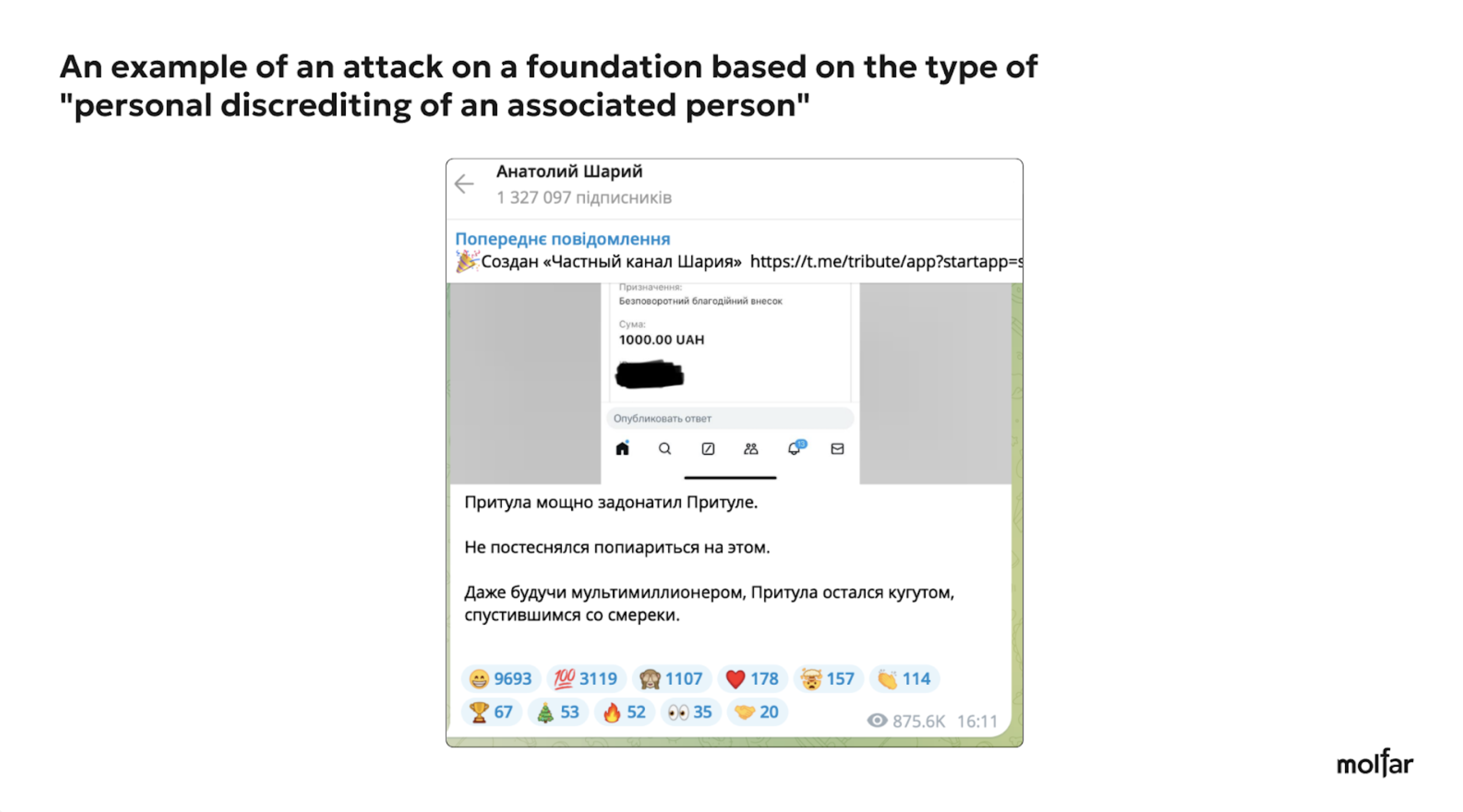

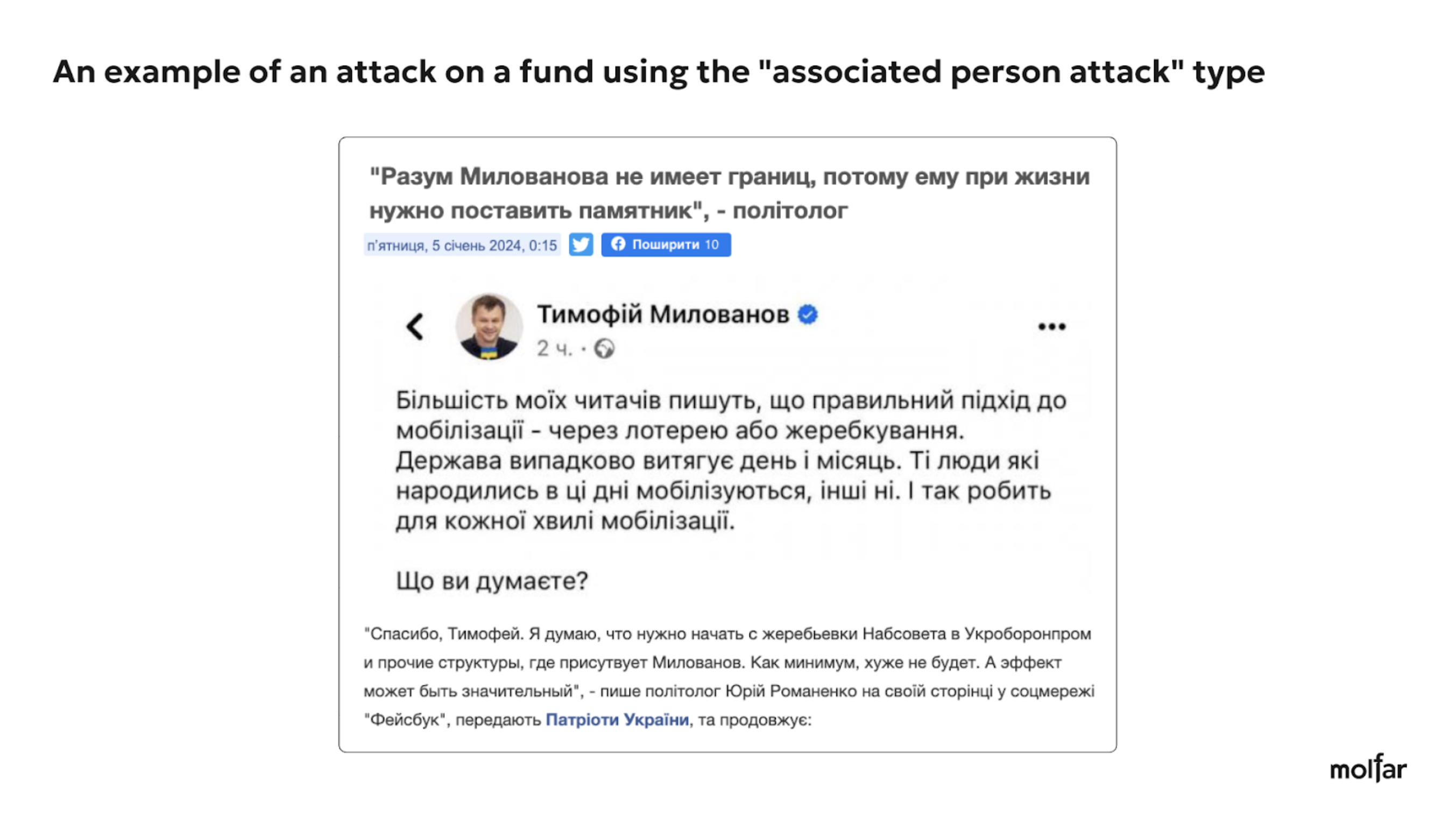

— often, attacks are targeted at individuals associated with the Foundation, such as founders or leaders. These attacks are made to undermine the individualʼs credibility and lower their public profile. They are partly manipulative, using real news stories with a dash of fake or provocative statements. For example, on the United24 platformʼs Instagram page, the platformʼs ambassador, historian Timothy Snyder, was threatened with “novichok.” Another example is the attempt to discredit the head of the Come Back Alive Foundation, Taras Chmut, by the fugitive blogger Myroslav Oleshko, who called Chmut a “rear rat” with political ambitions without mentioning in the post that Taras Chmut had served as a marine. According to the SBU, Oleshko is suspected of information and subversive activities that harm the Armed Forces of Ukraine under Art. 114-1 (obstruction of the lawful activities of the Armed Forces of Ukraine and other military formations) and Part 4 of Art. 358 (use of a knowingly forged document);

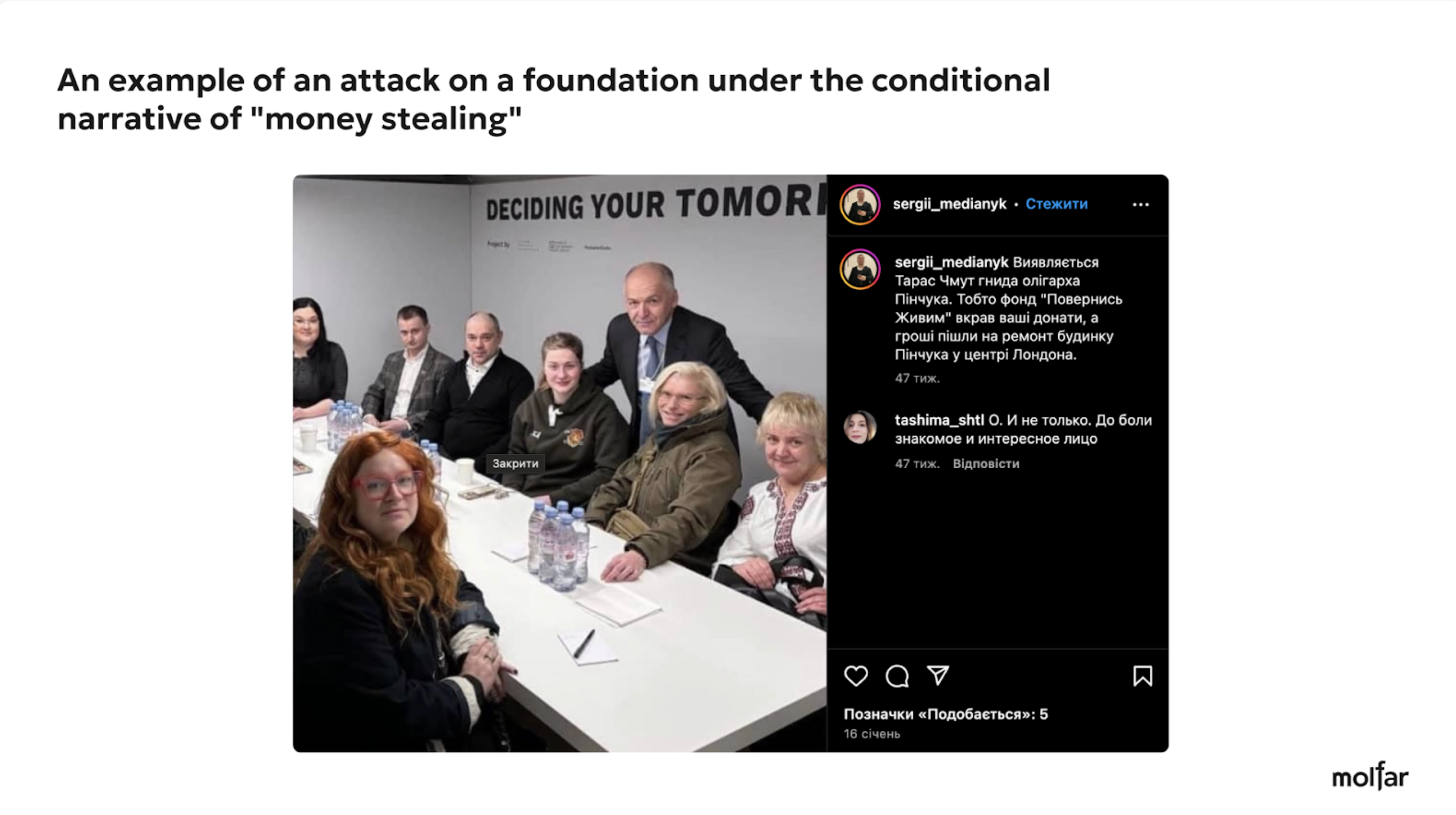

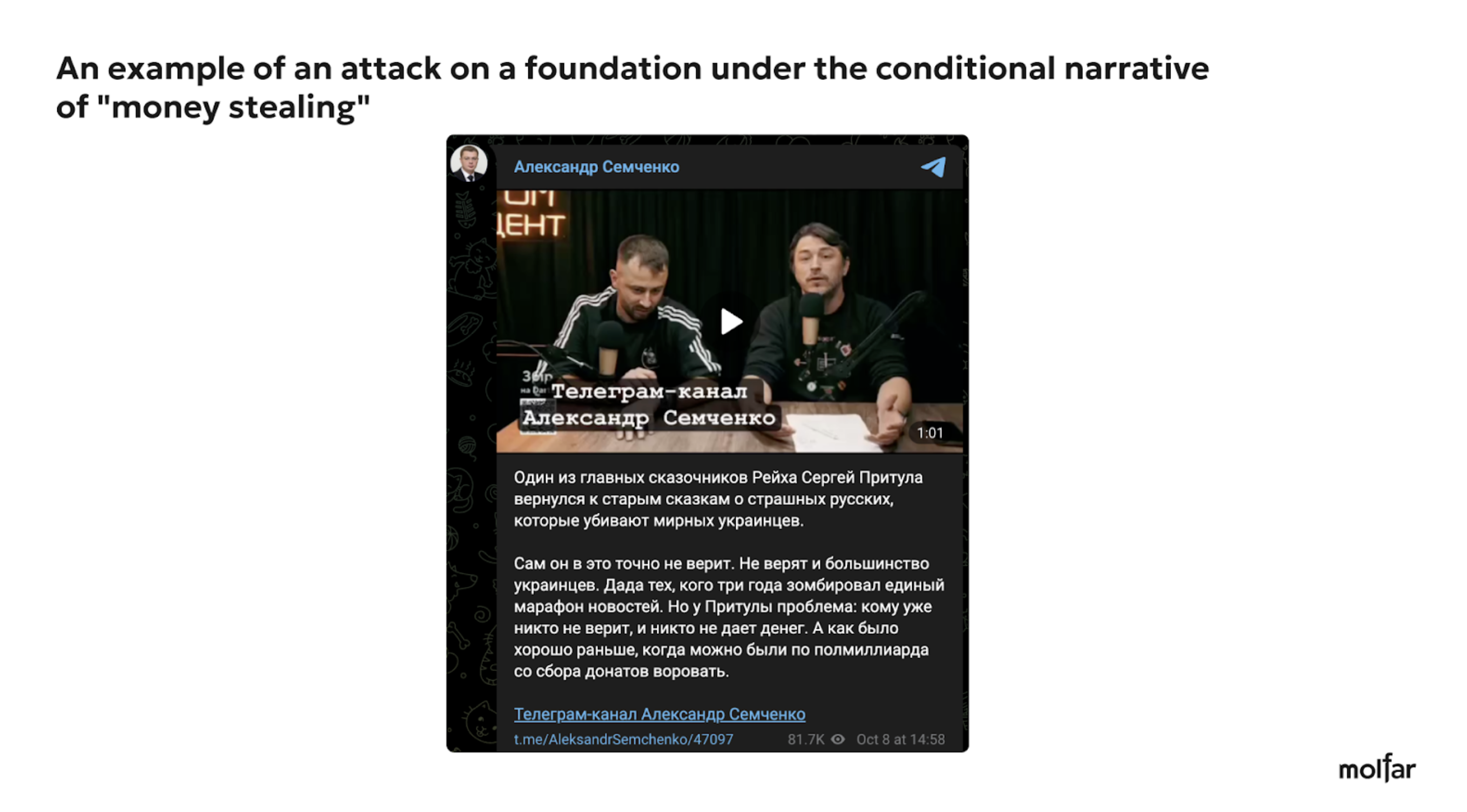

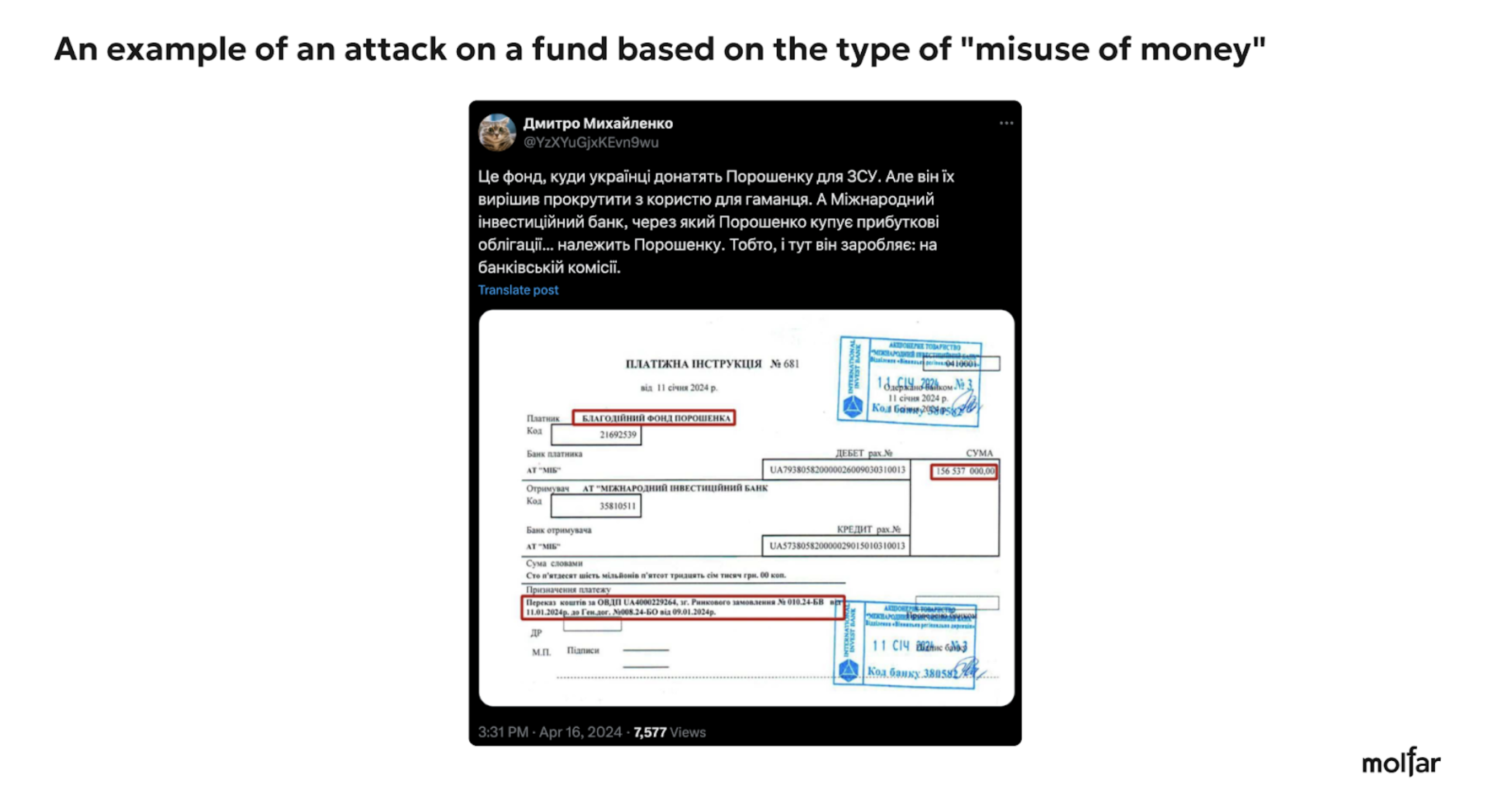

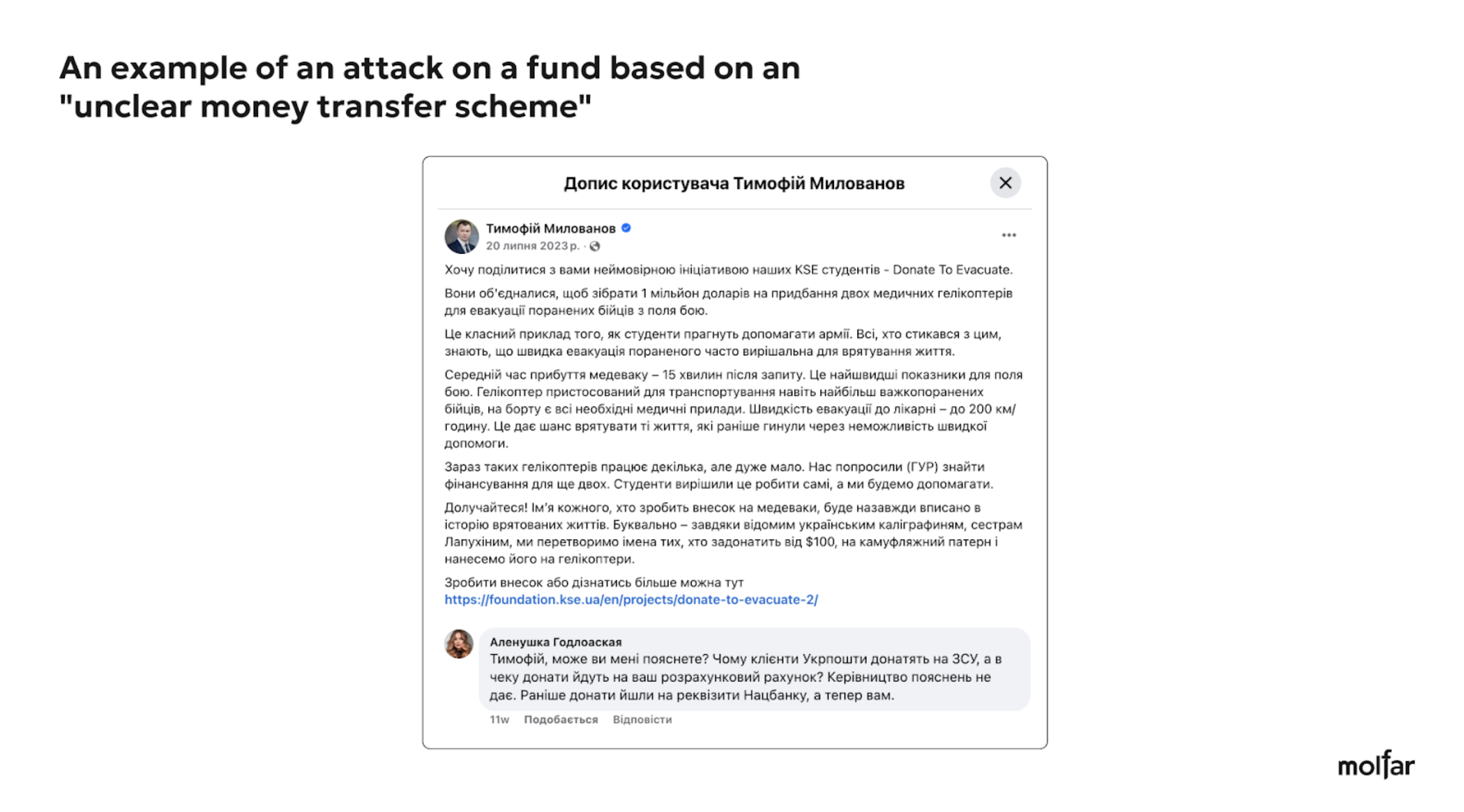

— embezzlement or misuse of the money. For example, comments addressed to the Serhiy Prytula Foundation asked, ʼWhere is the satellite?ʼ, since this fundraising campaign was once highly visible in the information field. Another example is an attack on the KSE Foundation about the alleged non-transparency of its transfer scheme, addressed to Tymofiy Mylovanov, who is associated with the Foundation;

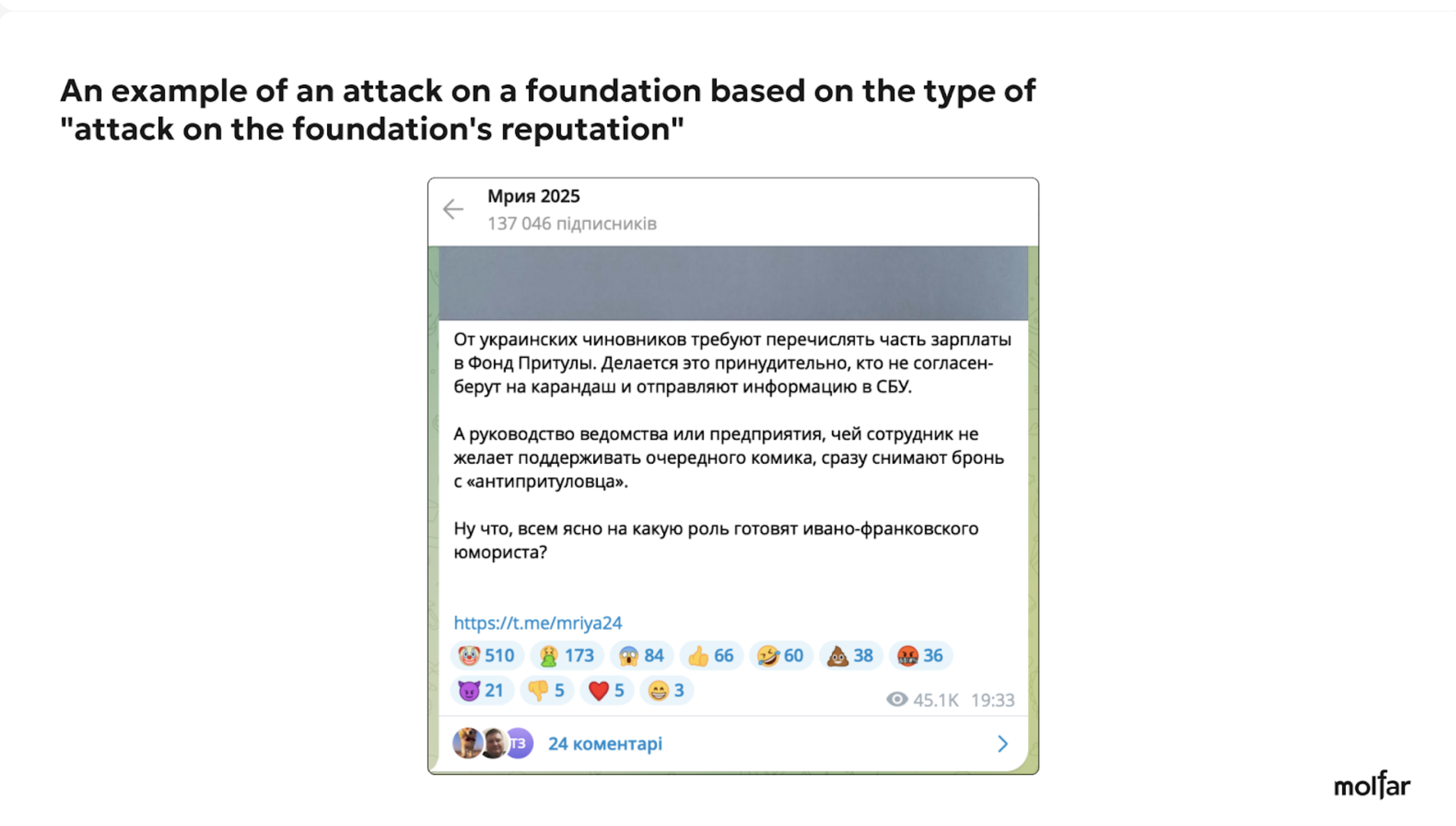

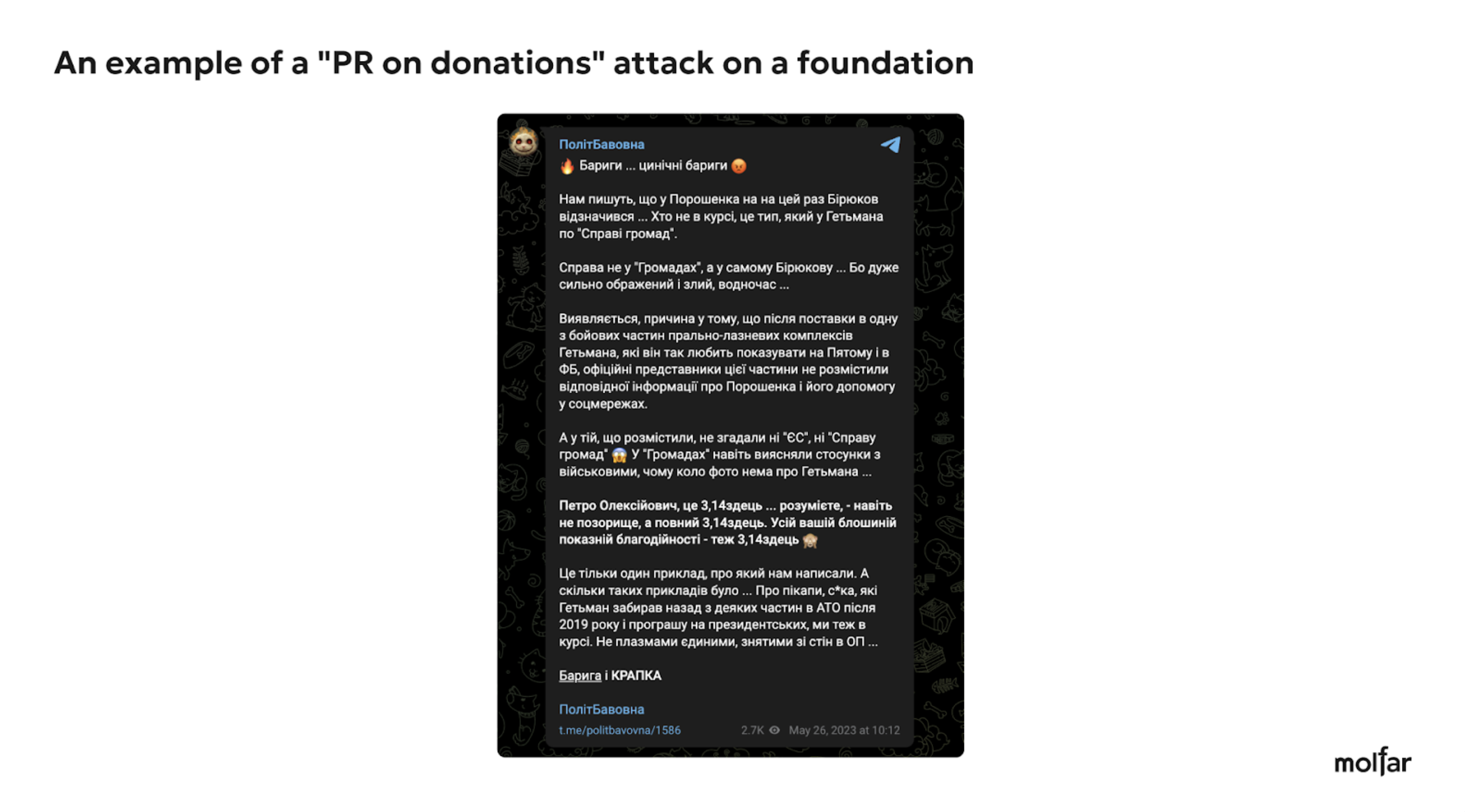

— attacks on the Foundationʼs activities and reputation. According to Molfar research, this type of attack is used throughout the Foundationʼs activities, not only during key fundraising campaigns or events. Judging by the probable commitment of the initiators, this type of attack is mainly used by Russian propaganda (as confirmed by a Radio Svoboda article of September 16, 2024) to spread fakes and reduce Ukrainiansʼ trust in Foundations in general. Attacks on a Foundationʼs reputation could also be caused by competition between Foundations and differences in political vectors. For example, the attack on the Petro Poroshenko Foundation was related to accusations that the fifth president was doing PR on the war.

Molfar analysts have highlighted three conditional “slogans” that run as a common thread through the criticism and discrediting of the funds.

Foundation | The most widespread types of attacks by narrative* | Number of posts and articles with these narratives |

United24 | Stealing money. | 77 |

United24 | Attack on Mykhailo Fedorov (Vice Prime Minister for Innovation, Education, Science and Technology Development, Minister of Digital Transformation of Ukraine). | 27 |

United24 | Attack on Zelenskyy. | 16 |

Come Back Alive | Personal discrediting of Taras Chmut (Director of the Foundation). | 18 |

Come Back Alive | Stealing money. | 16 |

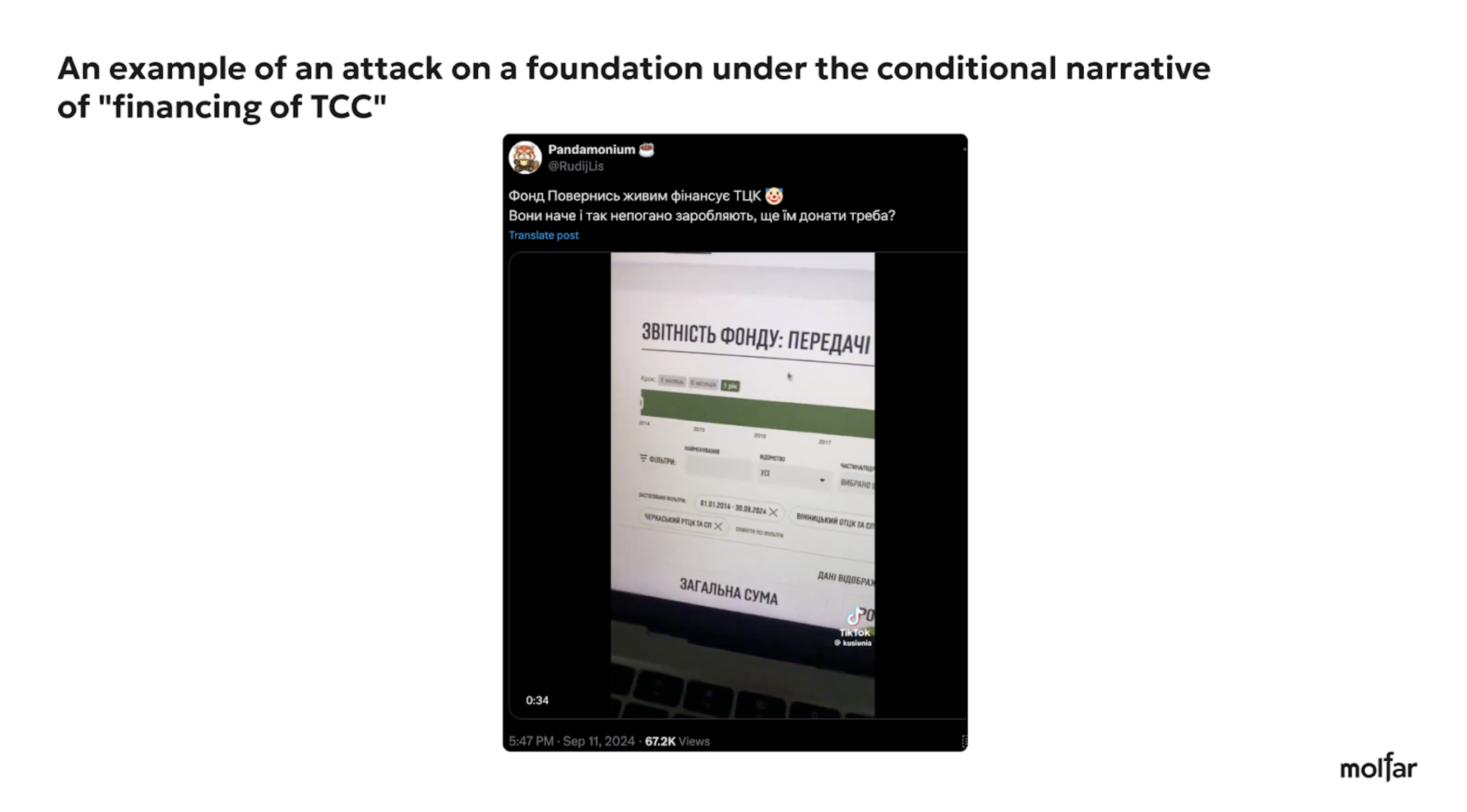

Come Back Alive | Conflict of interest (the Come Back Alive Foundation finances the TCC). | 10 |

Serhiy Prytula Foundation | Stealing money. | 58 |

Serhiy Prytula Foundation | Personal discrediting of Serhiy Prytula (the director of the Foundation). | 29 |

Serhiy Prytula Foundation | Attack on the Foundationʼs reputation. | 14 |

Petro Poroshenko Foundations | Misuse of funds. | 13 |

Petro Poroshenko Foundations | PR on donations. | 7 |

Petro Poroshenko Foundations | PR in the war. | 6 |

KSE Foundation | Attack on Tymofiy Mylovanov (President of KSE). | 4 |

KSE Foundation | Attack on KSE (Kyiv School of Economics). | 2 |

KSE Foundation | Wasteful spending of money. | 1 |

* several types of attacks could be used in one attack, for example, accusations against Fedorov and Zelenskyy at the same time and slogans related to PR or stealing money.

As we can see, the main goal of the attacks is to discredit the Foundations by any means necessary so that Ukrainians and businesses stop donating to them. As a result, the Foundations would be unable to send as much aid to the frontline.

Detailed analytics of attacks on funds

Come Back Alive

Come Back Alive is a charitable Foundation that has been helping the army since 2014. Taras Chmut is the director of the Foundation. As of 2024, the Foundation is engaged in military and veteran projects. According to Forbes and the Foundationʼs website, as of 2024, Come Back Alive has raised UAH 12 billion for the Ukrainian defense forces.

The analysis shows that the Come Back Alive Foundation is being attacked by individuals or bots who may be affiliated with Russian entities and, possibly, the European Solidarity party.

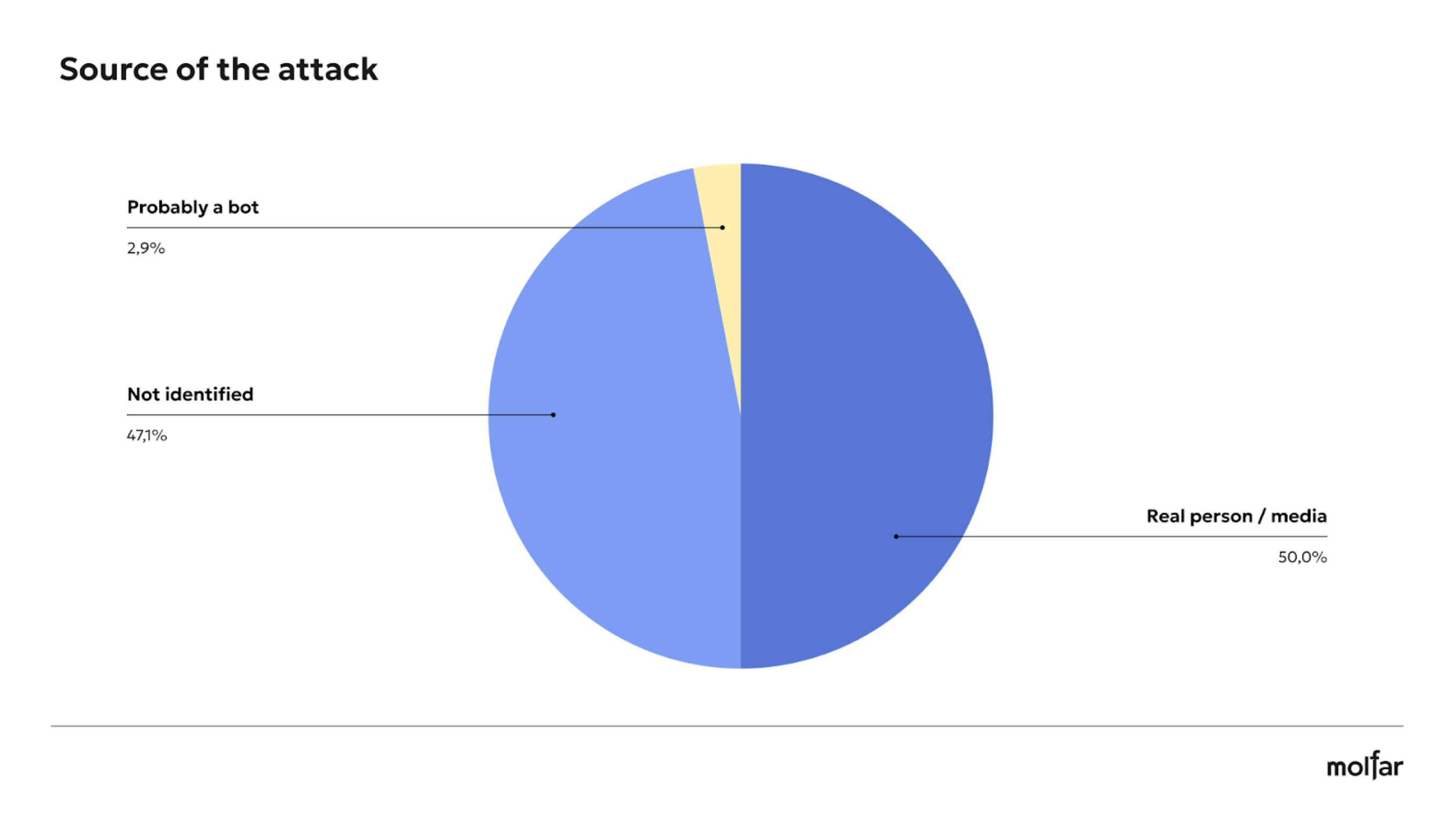

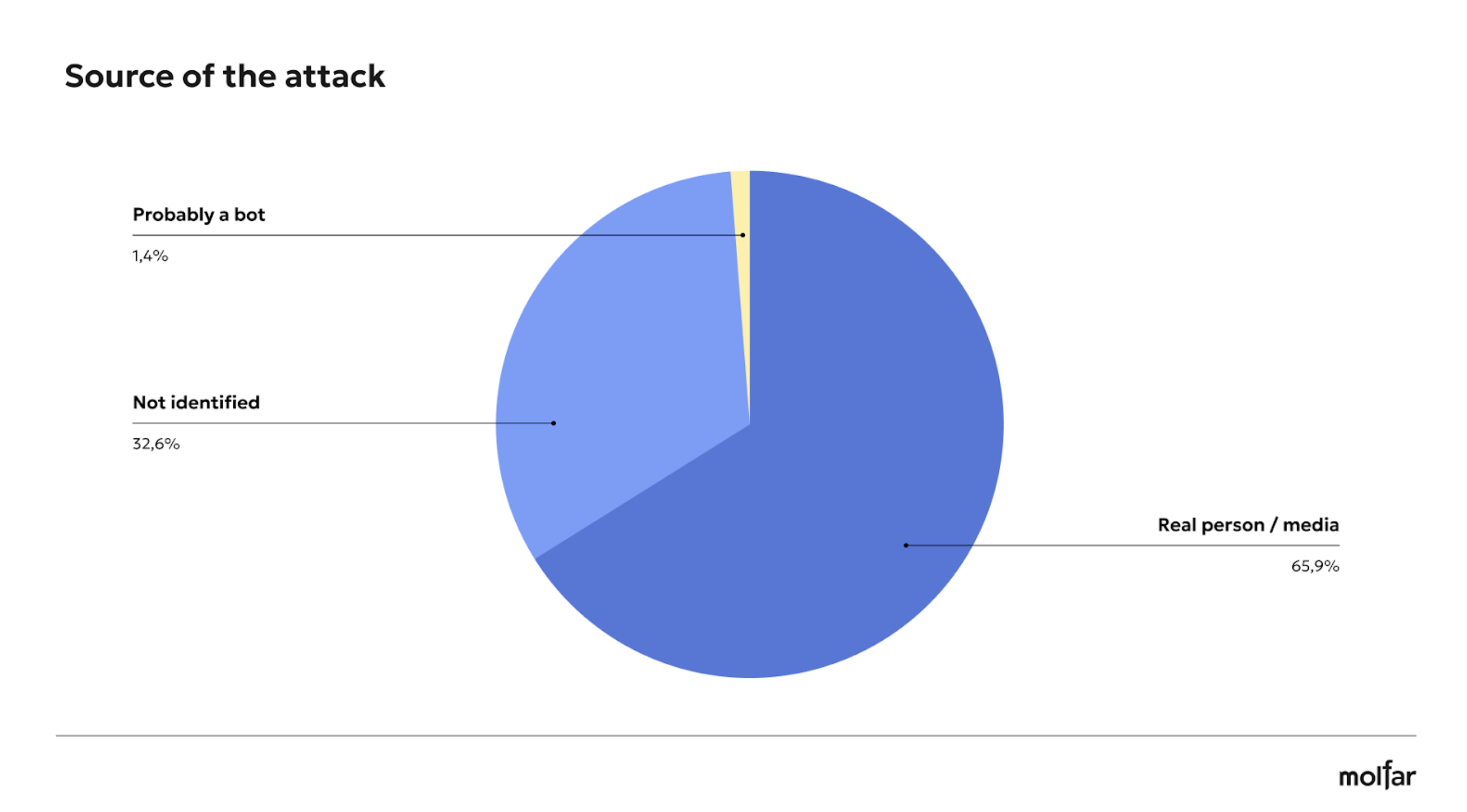

During the analysis of attacks on the Come Back Alive Foundation, publications on social networks and in the media were reviewed. 68 attacks were identified: 34 were made by a real person or media outlet, 32 by unidentified sources, and 2 publications were probably made by bots.

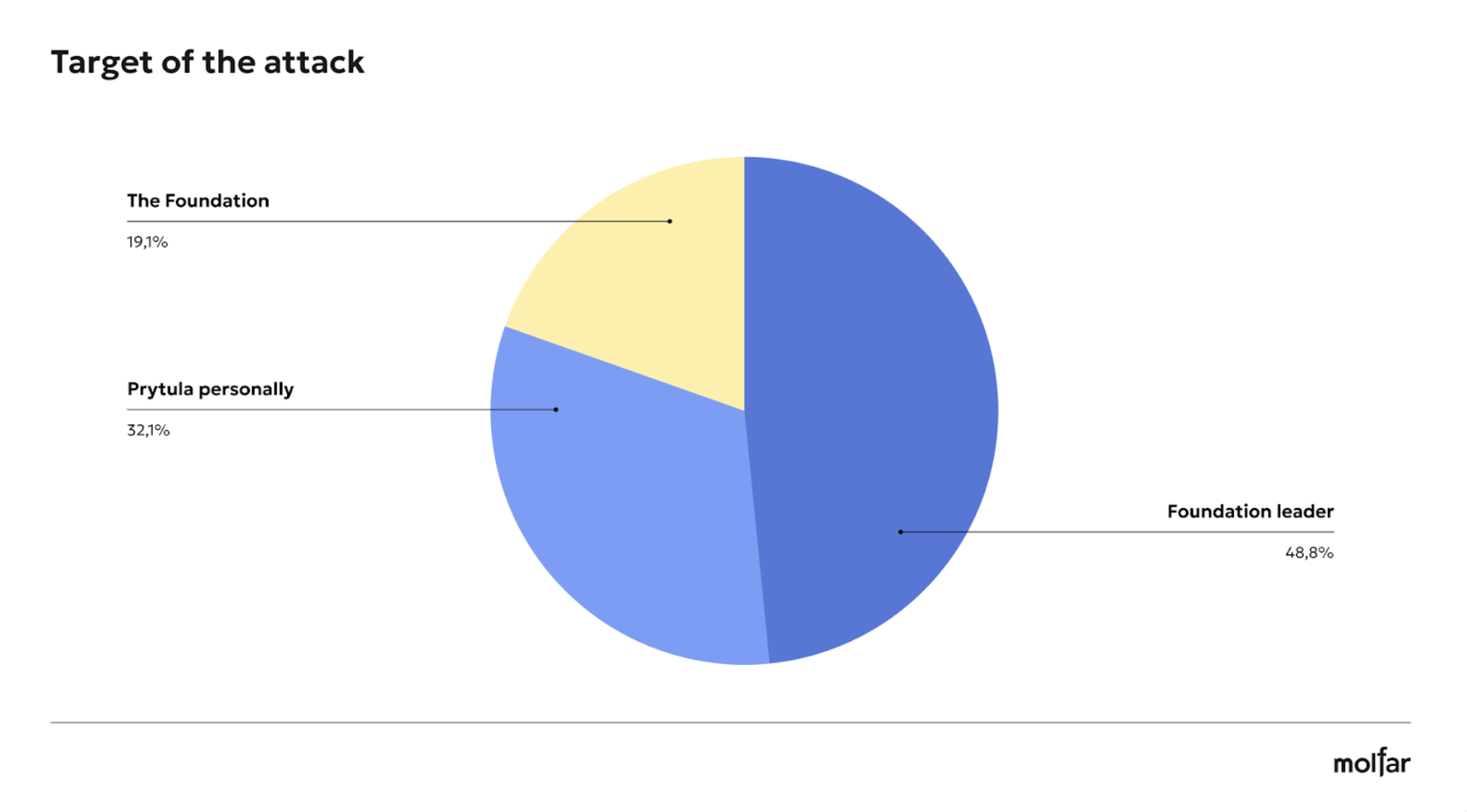

Most of them were aimed at discrediting Taras Chmut personally: there were 39 such attacks, which often concerned his public statements on the Ukrainian defense industry and accused him of being connected to the government or of incompetence. A smaller number of attacks targeted both the Foundation and Taras Chmut (14 cases), with the most common narrative being that the Foundation and Chmut were stealing funds. There were 15 attacks on the Foundation only, most of them related to the Foundationʼs funding of the Territorial Centre for Recruitment and Social Support (TCC).

Three examples of attacks on the Foundation under the most common narratives and slogans: personal discrediting of Chmut, embezzlement of money, and conflict of interest (allegedly, the Come Back Alive Foundation finances the TCC).

Only 22.1% of the attacks on social media were aimed exclusively at the Foundation. In comparison, the majority (57.4%) were personally directed at Taras Chmut. The attacks intensified after Chmutʼs statements in interviews about the army or after other significant events (for example, after his appointment as a member of the supervisory board of the Defence Procurement Agency).

In response to an inquiry, the Come Back Alive Foundation said that its team had noticed a trend: when a post quickly gains popularity online, attacks (complaints about content, an influx of bots in the comments, or even temporary automatic deletion of the post) may soon follow. Bot activity also increases sharply after interviews with a Foundation representative or after announcements of new fundraisers, the Foundation adds. However, they noted that such actions are predictable and follow a pattern.

The Foundationʼs team also adds that there is a tendency to create fake personal Instagram/Facebook accounts in the names of Foundation members who work with the public and to raise funds through these accounts. However, with an automated setup in place, the team detects and tracks the fake activity quickly, the Come Back Alive press office says.

The Come Back Alive team is confident that the attacks are directly aimed at damaging the Foundationʼs reputation and credibility among people. However, the Foundation is convinced that these attempts do not affect the teamʼs work, as it has an active community that often recognizes where bots are active and helps to fight disinformation.

Serhiy Prytula Foundation

Serhiy Prytula Foundation is a charitable Foundation that works in two areas, “Helping the Army” and “Humanitarian Aid.” It was established in 2020. Serhiy Prytula is the founder, and Andriy Shuvalov is the director.

The analysis shows that the Serhiy Prytula Foundation is being attacked by persons or bots that may be affiliated with Russian structures and, possibly, the European Solidarity party, as well as by sources whose affiliation could not be identified.

The research showed that the attacks usually occur not during the fundraising campaigns or projects themselves but after they have ended. This means they are aimed not so much at sabotaging fundraising within specific projects as at the Foundation and the person associated with it, or at damaging trust in general. In such cases, a public event often triggers the information attack.

Analyzing the sources of attacks on the Serhiy Prytula Foundation, Molfar found that the largest share of attacks (64.5%) was spread on TG, while FB was used in almost 13% of attacks. Media and YouTube spread attacks on the Foundation in 12.3% of cases, and TW and other networks were used for 8.7% of posts.

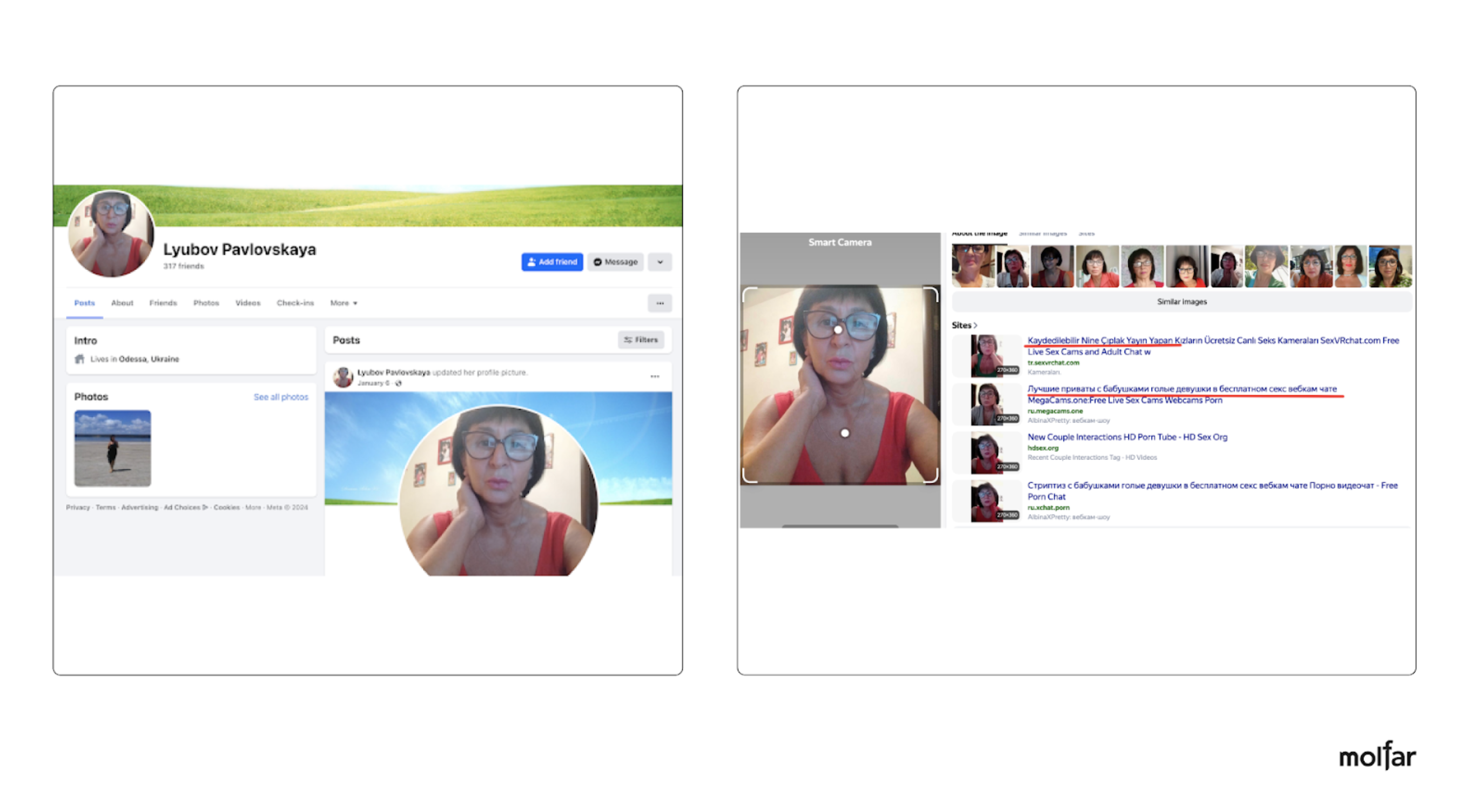

Even though the percentage of bots among the attack sources is not enormous, the artificial nature of an attack can still be clearly tracked and analyzed. For example, the FB user Lyubov Pavlovskaya is probably a bot. This account posted comments under other usersʼ posts. What are the grounds for calling this account a bot? When Molfar analysts checked the account photo, it turned out that the same photo was used on numerous adult websites.

A collage with screenshots showing an example of a bot and the result of searching for a person by a photo from a profile of the commenter

Here are examples of three attacks on the Foundation under the most common narratives and slogans (“stealing money,” “attacking the Foundation reputation,” and “attacking an associated person”).

Most of the attacks targeted Serhiy Prytula as a person and a volunteer. The frequency of the attacks often does not depend on the projects of the Foundation — the authors of the attacks usually choose any news story and involve the Foundation or Serhiy Prytula personally in a negative context. Also, in their messages, the authors of negative posts and comments do not address the Foundation but Serhiy Prytula personally, making him responsible for all the actions of the Foundation.

A separate topic of attack is the alleged purchase of 3 apartments by Serhiy Prytula during the full-scale war. In fact, this property was purchased back in 2020, at the earliest stage of construction, as shown by the documents published by Prytula. According to the Foundation, this attack aims to raise doubts about the Foundationʼs honesty and reduce donations.

Despite regular statements by the Foundation that its operational activities (including salaries) are financed from a separate account and donations to the “military” account are used to help the army, attacks continue to be posted online with the narrative that 20% is allegedly allocated for personal needs.

The press office of the Foundation adds that some attacks were related to buying the ICEYE satellite. Fake news claimed that the satellite “does not work” or that the Foundation had overpaid for the technology. Even though the satellite is used for the needs of the Ukrainian army and helps to collect intelligence, the information attacks tried to destroy trust in this big project.

The Foundation is working to moderate comments on social media, especially during a large fundraising campaign or after important interviews. The team monitors spam and fake news and removes harmful comments. It also works to respond quickly to bot attacks and cooperate with social media administrations, although this requires significant resources.

It was also found that TikTok (226 negative comments) and YouTube (105 negative comments) had perhaps the biggest number of attacks against the Foundation.

In response to a request from the editors of the Molfar website, the Foundation replied that the main goals of the attacks, in its opinion, are to reduce the financial support received for the needs of the army, undermine confidence in volunteers as an important pillar of Ukrainian society, and discredit Ukraine in the eyes of the international community by creating a false impression that public initiatives are ineffective. For example, according to the Foundationʼs press office, during the launch of the “Revenge Swarm 2.0” fundraising campaign, the team worked until 5 am to counter spam and disinformation, which diverted resources from the Foundationʼs main task of attracting donors. And during the “Nightmare Mega Campaign,” META removed 80% of the content due to mass complaints and threatened to block the page, which significantly reduced the reach.

United24

United24 is the official Ukrainian fundraising platform launched at the initiative of President Volodymyr Zelenskyy. The platform does not have its own bank accounts but uses the accounts of several Ukrainian ministries. It was created mainly to engage international donors for humanitarian needs but also has military-related projects. The platform invites Ukrainian and foreign public figures to promote its fundraising and has created its own media agency to share information about the war in English and other languages.

The analysis shows that the United24 platform is being attacked by persons or bots that may be affiliated with Russian entities and, possibly, the European Solidarity party. There are also attacks whose affiliation with any organization the analysts could not identify.

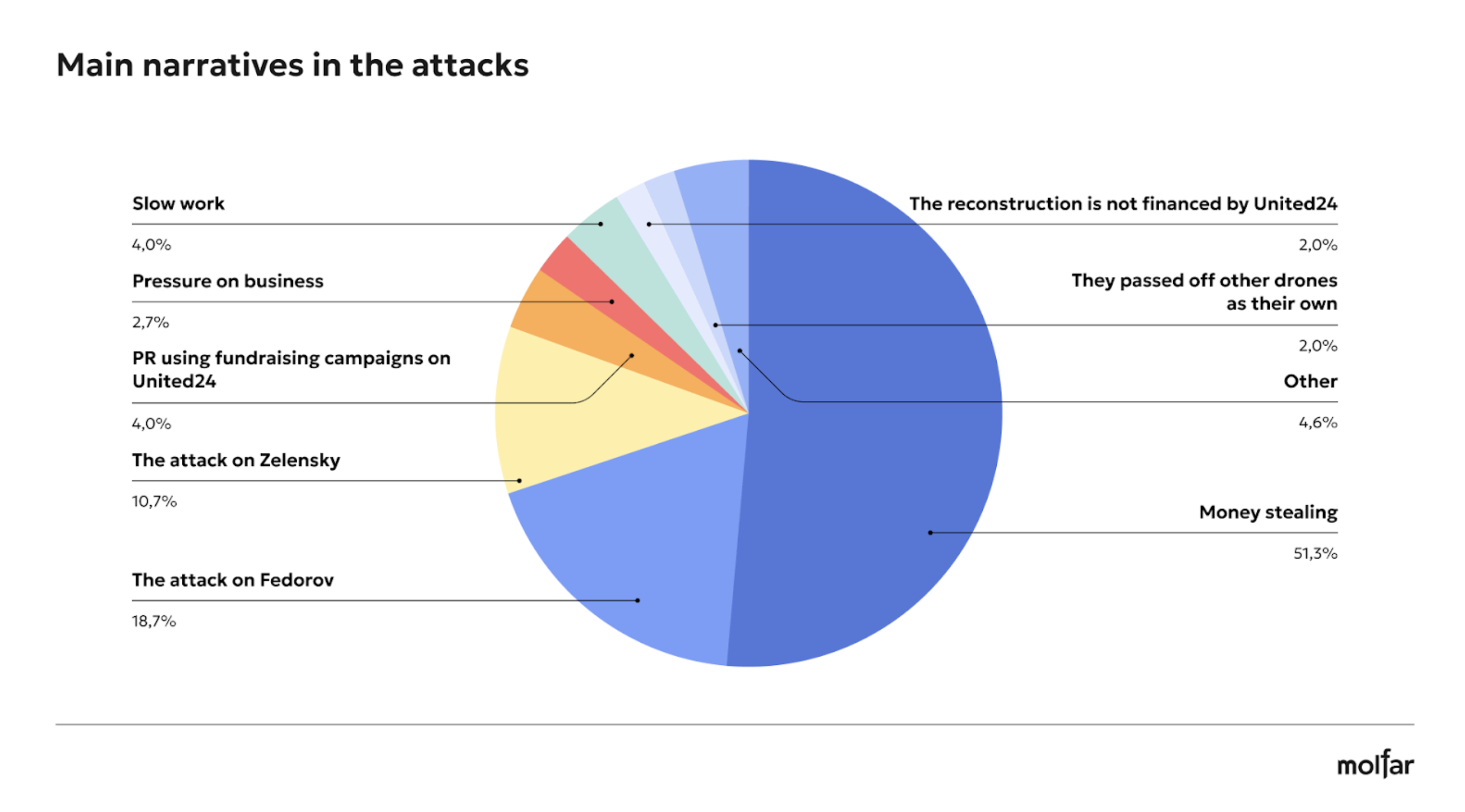

In 44 cases (29.4%), the attacks used direct negative information about the organizers (initiators) of the platform, its employees, or associated persons. Since the platform has no single documented leader and is not registered as a separate legal entity, the negative attacks directly targeted the initiator of the platform, President V. Zelenskyy, and the head of several of the platformʼs areas, Mykhailo Fedorov, Vice Prime Minister for Innovation, Education, Science and Technology Development and Minister of Digital Transformation of Ukraine.

(Updated December 23, 2024) In response to the inquiry, the press service of UNITED24 explains that bot activity usually intensifies during significant informational events (such as support from well-known figures with large audiences) or major fundraising campaigns. As an example, they cite the following case: "Recently, a peak was recorded on the eve of the U.S. elections. Bots, primarily with Russian names, began massively following and unfollowing UNITED24’s page on the social network X. Most of these accounts were also following other popular Ukrainian accounts and fundraising initiatives (such as 'Come Back Alive,' Ihor Lachenkov, etc.). This is likely done to disrupt the algorithmic ranking of the account in users’ feeds."

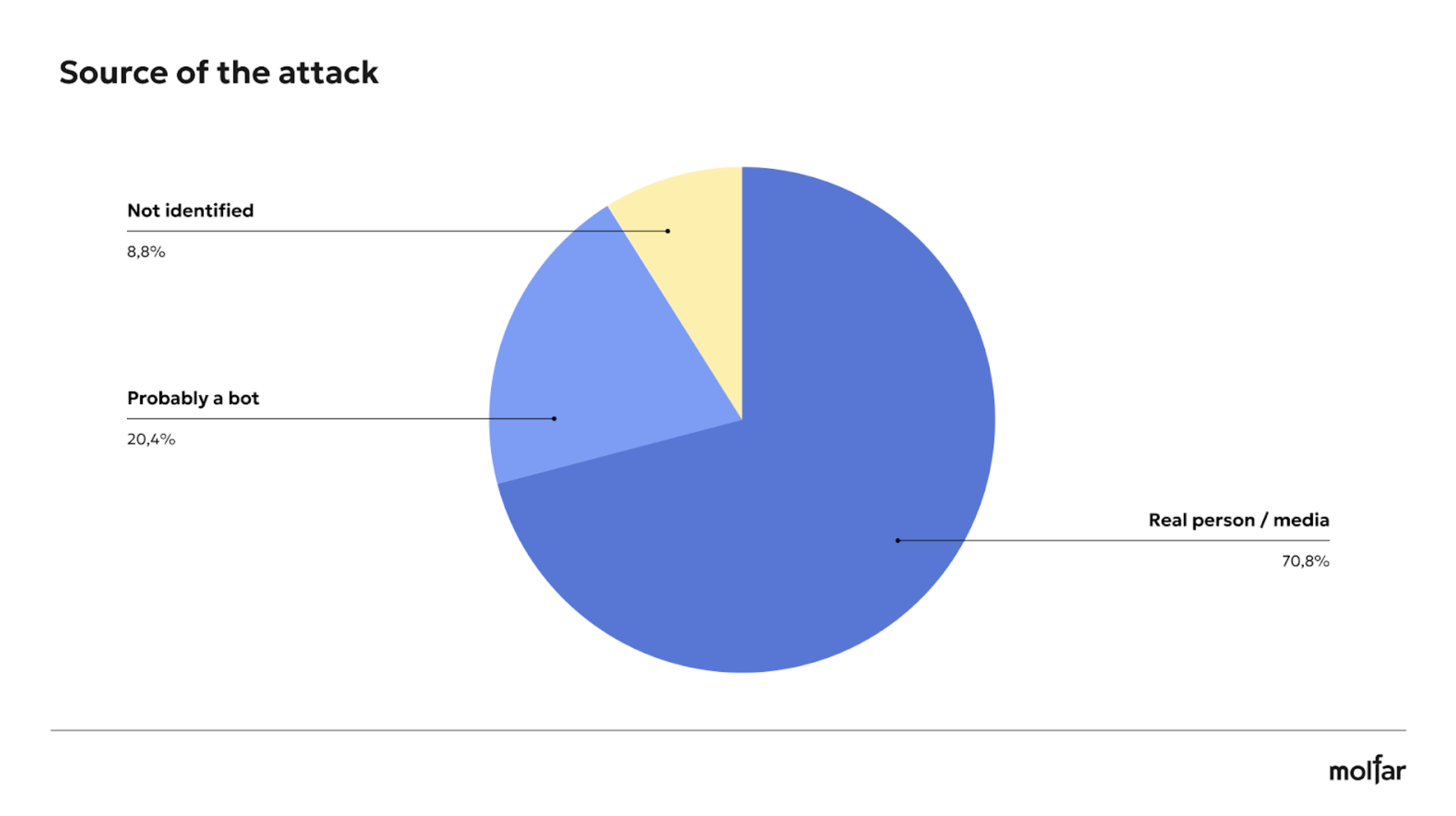

During the preparation of the report, Molfar analysts found 16 unique narratives across 113 attacks, which were also grouped by the main “slogans” of the attacks. Some attacks were written in Russian. These 113 attacks came from different sources: likely bots accounted for 20.4% of all posted news items or posts, and another 8.8% came from unidentified sources that were difficult to classify as a media outlet or a real person.

Here are examples of three attacks on the Foundation using the most common narratives and slogans (“stealing money,” “attack on Fedorov,” “attack on Zelenskyy”).

30% of the attacks on social media and in the news were related to the initiators of the platform, while the rest mainly used other narratives. 51% of the attacks used a narrative about non-transparent reporting and theft of money.

(Updated December 23, 2024) The press service of the fundraising platform notes that on Instagram, bot attacks most often occur after the publication of report videos or joint posts with the President of Ukraine. On Facebook, bots most frequently send messages via Messenger, and this activity is not tied to specific informational events.

An analysis of posts on United24ʼs social media pages showed that Facebook was the main target of the alleged bots; there, the main attacks were carried out by a network of identical bots with the same registration date and similar avatars. Other social networks either had no negative comments at all (perhaps the comments were moderated by the platformʼs social media specialists; the editors sent a request for information but had not yet received a reply at the time of publication), had posts closed to comments, or had only a few negative comments.
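The two signals mentioned here, a shared registration date and similar avatars, lend themselves to a simple grouping check. The Python sketch below illustrates the idea only and is not Molfarʼs actual tooling: the account records are hypothetical, and "avatar_id" is an assumed stand-in for whatever avatar-similarity key an analyst might compute (here, exact equality is used as a simplification of "similar").

```python
from collections import defaultdict
from datetime import date

# Hypothetical commenter records, invented purely for illustration.
accounts = [
    {"handle": "acct_01", "registered": date(2024, 5, 2), "avatar_id": "stock_a"},
    {"handle": "acct_02", "registered": date(2024, 5, 2), "avatar_id": "stock_a"},
    {"handle": "acct_03", "registered": date(2024, 5, 2), "avatar_id": "stock_a"},
    {"handle": "acct_04", "registered": date(2019, 8, 17), "avatar_id": "photo_x"},
    {"handle": "acct_05", "registered": date(2021, 1, 9), "avatar_id": "photo_y"},
]


def find_suspicious_clusters(records, min_size=3):
    """Group accounts by (registration date, avatar key) and keep large groups."""
    groups = defaultdict(list)
    for record in records:
        key = (record["registered"], record["avatar_id"])
        groups[key].append(record["handle"])
    # A group is only a lead for manual review, not proof of bot activity.
    return {key: handles for key, handles in groups.items() if len(handles) >= min_size}


clusters = find_suspicious_clusters(accounts)
for (registered, avatar_id), handles in clusters.items():
    print(f"{len(handles)} accounts registered on {registered} share avatar key {avatar_id}: {handles}")
```

The `min_size` threshold is an arbitrary assumption; in practice, a flagged cluster would still need manual verification before any account is labeled a bot.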

(Updated December 23, 2024) In response to our inquiry, the United24 team confirmed that they are working on comment moderation: "We delete negative comments containing Russian propaganda narratives and ban their authors," United24 stated, adding that this is due to bots attempting to create a negative impression of the event, discredit the platform, or influence its visibility online.

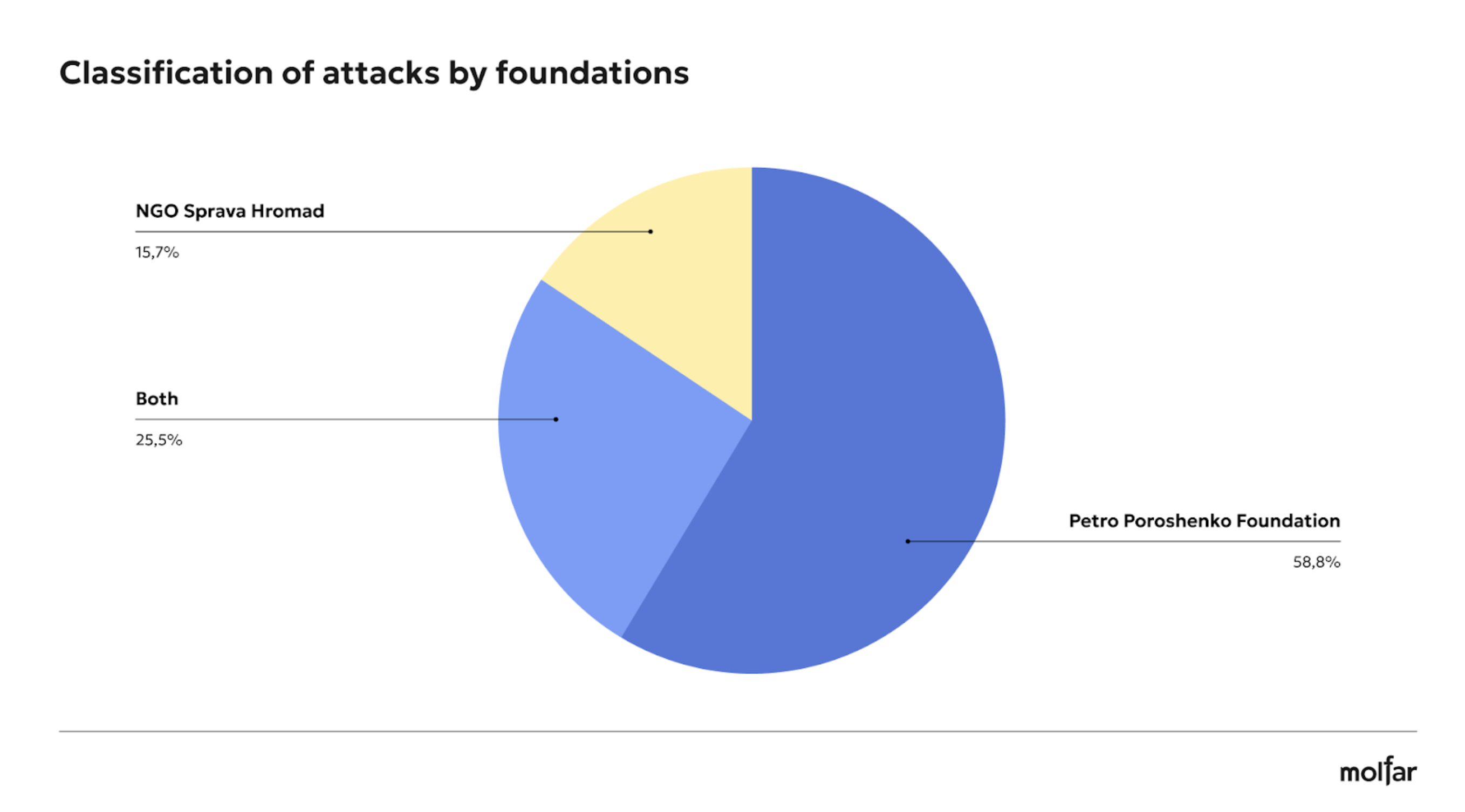

The Petro Poroshenko Foundation and the NGO Solidarna Sprava Hromad

Two charitable Foundations are associated with Petro Poroshenko:

— The Petro Poroshenko Foundation (FB): according to Poroshenkoʼs own statements, the Foundation does not accept donations from citizens and is funded by the Poroshenko family. The Foundation is engaged not only in helping the military but also in civilian projects;

— NGO Sprava Hromad (legal name: NGO Solidarna Sprava Hromad) (Inst, FB, TW, TG): a Foundation that collects donations and spends them only on purchasing aid. The Foundation is focused on helping the military, with projects divided into groups: logistics, welfare, equipment, intelligence, combat, and other projects.

In the report, Molfar analysts did not collect attacks aimed at Petro Poroshenko personally in which neither the name of the platform nor specific projects of the Foundations were mentioned. Since Poroshenko is a politician, the 5th president of Ukraine, and a current MP, negative attacks directed at him alone would relate to his political activity, not to the Foundationsʼ direct activities. That is why Molfarʼs analysis did not take such attacks into account.

The analysis shows that the Petro Poroshenko charitable Foundation and the NGO Solidarna Sprava Hromad are being attacked by persons or bots who may be affiliated with Russian entities, possibly with the Sluha Narodu party, and entities whose affiliation has not been identified by Molfar analysts.

When analyzing social media and news sites, the report authors found 18 unique narratives for 51 attacks. Some attacks were written in Russian, and 13 of them were shared by other network members with similar texts.

30 attacks (58.8%) were aimed at the Poroshenko Foundation precisely because the name of the Foundation includes the name of a politician, and thus there is an association by name. At the same time, 13 attacks (25.5%) were made on both Foundations, and 8 attacks were detected on the Sprava Hromad Foundation, which is almost 16%.
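The percentages above can be re-derived from the attack counts. The snippet below is a minimal sketch of that arithmetic; the dictionary labels and the `shares` helper are illustrative names, and only the counts (30, 13, and 8 out of 51) come from the report.

```python
# Attack counts per target as reported in the text (51 attacks in total).
attack_counts = {
    "Petro Poroshenko Foundation only": 30,
    "both Foundations": 13,
    "Sprava Hromad only": 8,
}


def shares(counts):
    """Return each category's share of the total, in percent, rounded to 0.1."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


attack_shares = shares(attack_counts)
for name, pct in attack_shares.items():
    print(f"{name}: {pct}%")
```

Rounded to one decimal place, the last share comes out at 15.7%, which matches the "almost 16%" stated in the text.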

Here are examples of three attacks on the Foundation under the most common narratives and slogans (misuse of funds, PR on donations, and PR on war).

The analysis shows that Petro Poroshenko is the target of the attack, even when the attacks are directed at the Foundations, to discredit Poroshenko as a politician/public figure. This is confirmed by the number of comments under posts about helping the defense forces on the official social media pages of charitable Foundations associated with Poroshenko and under similar posts on his page. The editors of the Molfar website sent a request for information, but at the time of publication of this article, we have not received a reply yet.

25.5% of the identified and analyzed attacks on the Foundation in social media and news were related to narratives about the misuse of money. The sum of attacks with the narratives of “PR in the war” and “PR on donations” also amounts to 25.5% (11.8% and 13.7%, respectively). Molfar analysis of social media showed that Telegram is the main source of attacks on Poroshenko-related Foundations, accounting for 76.5% of negative posts.

KSE Foundation

KSE (Kyiv School of Economics) is a world-class private higher education institution founded in 1996 by the Economic Education and Research Consortium (EERC) and the Eurasia Foundation. The KSE Foundation was established in 2007 as a subsidiary of a non-profit corporation in the United States and has been focused on providing scholarships for talented young people since its inception. Tymofiy Milovanov is the President of KSE, and Svitlana Denysenko is the Director of the KSE Foundation.

Thus, we can see that the Foundation itself is rarely attacked directly; most of the negative comments relate to an associated person. The editors of the Molfar website sent a request for information, but at the time of publication of this article, we have not received a reply yet. This partially supports the authorsʼ hypothesis that the attacks come not from individual articles or posts but rather from comments under the posts of the Foundation or its associates. No infographic with diagrams is included for the KSE Foundation because there were too few attack cases for a detailed analysis.

Notes and research methodology

Notes. Based on the data collected during the investigation, Molfar analysts categorized the attacks by type of supporters. All supporters are marked with the prefix “likely”, and these attributions are solely the opinion of the authors of this report. In some cases, direct evidence was found linking attacks on a Foundation to supporters of particular structures or individuals.

The term “attack” in the analysis refers to unjustified emotional criticism, personal insults, negative comments, or individual posts on social media and articles. Attacks were collected by keyword searches (names of Foundations, their directors, or flagship projects) on social media and news sites. Some social networks, such as Facebook (FB), have their own page indexing. Sometimes a post does not appear in the general FB search, but it is displayed if you search on the authorʼs page using the same keywords. This may be due to FBʼs internal rules for ranking search queries.

Molfar OSINT specialists analyzed all attacks that took place from February 20, 2022, to October 2024 (and another one in November 2024) against the five largest Ukrainian charities that raise funds for the needs of the Ukrainian security and defense forces. According to Forbes, the largest Foundations are those that raise the largest amount for the needs of the military each year, and they are: United24 platform, Come Back Alive, Serhiy Prytula Foundation, KSE Foundation, and Petro Poroshenko Foundation + NGO Solidarna Sprava Hromad.

The results of the Kantar survey for December 2022 and April 2023 show that the Come Back Alive and Serhiy Prytula Foundations are among the TOP 5 Foundations Ukrainians trust with their charitable contributions. It is also worth noting that the Petro Poroshenko Foundation and the NGO ʼSolidarity Cause of Communitiesʼ use joint reporting published on social media. During the investigation, analysts could not find exactly how the funds raised in 2022 and 2023 were split between the two Foundations, so the Foundationsʼ combined total for the 2 years was used for the analysis.

According to data from Youcontrol, NGOs and Foundations do not disclose all of their income in their official reports. The amount of income shown in Youcontrol and on the official pages of Foundations may differ because of income from different sources (cryptocurrency, PayPal) and differences in exchange rates. Therefore, the analysts relied on the statements the Foundations published on their official websites or social media.

Methodology

Preparation of raw data for analysis

Selection of research objects. Five charitable Foundations that rank highest in fundraising to support the Security and Defense Forces were selected for the analysis of attacks. The analysis covers attacks from the beginning of the full-scale invasion to October 2024, plus one attack in November of the same year.

Data collection

Molfar analysts collected and organized the information in a tabular database using a single template for each charitable Foundation. The database included:

— negative articles and posts, with their sources, identified by the name of the Foundations or their projects, as well as by the name of the Foundationʼs director or an associate;

— coverage indicators (views, reactions, shares, comments);

— channels of dissemination of the same news or post on social media (lists of other sources where similar information was posted);

— date and type of source where the post or article was published.

Analysis of information

Sources and publications. To identify the links between supporters and attacks on Foundations, Molfar analyzed the following factors:

— Media's connection to stakeholders (supporters of the organizations): Molfar analyzed the resources that disseminated materials for the attacks for their allegiance to structures, political forces, or individuals associated with the interested parties (Ukrainian political forces, Russian authorities, Russian STOP-centers (centers for information and psychological operations));

— analysis of the context of publications: analysts evaluated comments or text that contrasted charitable Foundations or individuals, presenting some as “better than the competitor Foundation”;

— ties to the Russian Federation: the authors of the analysis checked articles and contributors for ties to Russia, analyzed possible public references to their involvement in Russian special services or in promoting pro-Russian narratives;

— analysis of the authorsʼ publication history and social media accounts: analysts reviewed accounts that promote similar narratives to understand their support for political forces or Russia.

Comments under official publications of charitable Foundations. Molfar reviewed the latest publications in the official Foundation channels to see if there were any negative comments under the posts. In addition, Molfar collected data from open sources according to the following criteria:

— 5 most recent publications related to assistance to the security and defense forces were analyzed;

— comments left by the authors of the publications were taken into account as neutral;

— an analysis of negative comments and their authors was conducted. Some authors were recognized as fake accounts (or bots) because their profile photos were stolen from other accounts, and/or they made many reposts in one day, had fake names, and/or their profiles were registered to Russian numbers. Some accounts could not be identified as bots or as real people due to the lack of sufficient information (profiles were closed, photos were not used on other resources, and the accounts themselves were registered to unidentified registration data).
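The account checks described in the last item can be sketched as a simple rule set. This is only an illustration under stated assumptions, not Molfarʼs actual tooling: the `Account` fields, the `classify` function, and the repost threshold are hypothetical, since the report says "many reposts in one day" without giving a number.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold: the report does not quantify "many reposts in one day".
MANY_REPOSTS_PER_DAY = 20


@dataclass
class Account:
    """Illustrative record; every field name is an assumption. None = not checkable."""
    photo_stolen: Optional[bool] = None
    max_reposts_per_day: Optional[int] = None
    fake_name: Optional[bool] = None
    russian_phone_number: Optional[bool] = None


def classify(account: Account) -> str:
    """Apply the report's criteria: any positive signal marks a likely bot."""
    reposts = account.max_reposts_per_day
    signals = [
        account.photo_stolen,
        None if reposts is None else reposts >= MANY_REPOSTS_PER_DAY,
        account.fake_name,
        account.russian_phone_number,
    ]
    if any(s is True for s in signals):
        return "likely bot"
    # Closed profiles or unidentified registration data leave signals unchecked.
    if any(s is None for s in signals):
        return "undetermined"
    return "no bot signals found"


print(classify(Account(photo_stolen=True)))  # one positive signal is enough
print(classify(Account()))                   # nothing could be checked
```

Treating any unchecked signal as "undetermined" mirrors the reportʼs caution about accounts that could not be identified as either bots or real people.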

Research of social media activity of popular accounts. The analysts checked accounts of populists, propagandists, opinion leaders, persons affiliated with various political parties, and accounts that may have a pro-Russian audience to identify attempts to promote negative information about the Foundations.

We used automated tools to monitor information. The analysis involved automated services that collected articles, comments, and feedback on the Foundationsʼ activities to identify negative narratives and the areas they target in the media and social networks as effectively as possible.

Evaluation of the collected data. Molfar analysts separated constructive criticism from direct attacks by competitors or other involved accounts in the comments section of social media and media. The specialists analyzed the narratives, compared accusations with each other in the found comments, and selected unified narratives and types of attacks on the funds to generate further data for comparing attacks.

This selection was necessary to identify a minimum number of conditionally unified narratives and apply them to the Foundations. This ensured a systematic approach to classifying attacks on Foundations by type.

Analysis of attacks in the context of “slogans” and narratives. Molfar worked on:

— analyzing negative articles and comments for the presence of the same consistent expressions (“slogans”) that are regularly used to discredit the fund;

— identifying the cyclical nature of attacks by date and their dependence on events related to the Foundation and its leaders;

— selecting sources that repeatedly used the same “slogans” for attacks. This allowed us to identify resources that spread narratives and possibly cooperated in a joint destructive campaign;

— comparative analysis of the found “slogans” with those used by Russian sources. The findings helped identify narratives that may be of Russian origin or part of information operations aimed at discrediting Ukrainian charitable organizations.
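The idea of finding sources that repeatedly use the same “slogans” can be illustrated with a short sketch. It assumes posts are available as (source, text) pairs; the `slogan_reuse` function, the channel names, and the sample posts are made up for illustration, and plain substring matching is a simplification of whatever matching the analysts actually used.

```python
from collections import Counter


def slogan_reuse(posts, slogans, min_uses=2):
    """Return {(source, slogan): count} for pairs seen at least min_uses times."""
    counts = Counter()
    for source, text in posts:
        lowered = text.casefold()
        for slogan in slogans:
            if slogan.casefold() in lowered:
                counts[(source, slogan)] += 1
    return {pair: n for pair, n in counts.items() if n >= min_uses}


# Made-up sample data, for illustration only.
posts = [
    ("channel_a", "Another case of PR on donations"),
    ("channel_a", "Pure PR on donations, nothing more"),
    ("channel_b", "Misuse of funds again?"),
]
slogans = ["PR on donations", "misuse of funds"]

repeat_users = slogan_reuse(posts, slogans)
print(repeat_users)
```

In this sample only `channel_a` crosses the `min_uses` threshold, so it is the only source flagged as reusing a slogan.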

Analysis of attacks on specific projects:

— we identified attacks linked to major Foundation projects or other events. Thanks to this analysis, it is possible to see how an attack depends on the activities of the Foundation and constructive criticism, or on the threat to the reputation of the Foundation and its leaders;

— we analyzed the methods of discrediting to identify the most common approaches: aggressive articles and publications in the media, as well as negative comments under the posts of Foundations or their directors (associates). Thus, we identified which channels were more often used to spread negative narratives;

— we analyzed the use of sources (opinion leaders and media) that repeatedly participate in discrediting. The discovery of such patterns allowed us to identify the main channels for spreading attacks and their role in shaping public opinion about the activities of the Charitable Foundation.

Identification of the sources most involved in the attacks.

— Molfar found a series of posts with similar accusations or similar posts in a short period of time on the pages that spread the narratives using keywords for all Foundations;

— found and identified authors who actively spread negative narratives;

— analyzed raw data to identify the most engaged sources.

Checking the artificiality of attacks. Molfar analyzed the posts/articles and comments according to the following criteria:

— whether the same publications/bloggers are involved in the attacks (which may indicate the paid-for nature of the posts and a connection between similar public pages);

— whether the materials in different sources are presented in the same way when attacking the same topic (the exact same phrases and statements);

— whether bots and fake accounts were involved in the attacks;

— whether the attacks were carried out by reposting only or by copying posts with minor changes;

— where publications criticizing Foundations first appeared (in pro-Russian or pro-Ukrainian resources).
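The distinction between pure reposts and copies with minor changes can be approximated with a text-similarity check. The sketch below uses `difflib.SequenceMatcher` from the Python standard library; the `relation` function, the 0.85 threshold, and the sample sentences are assumptions for illustration, not values or methods taken from the report.

```python
from difflib import SequenceMatcher


def relation(text_a: str, text_b: str, threshold: float = 0.85) -> str:
    """Label a pair of posts by how closely their normalized texts match."""
    # Normalize case and whitespace so trivial formatting changes are ignored.
    a = " ".join(text_a.casefold().split())
    b = " ".join(text_b.casefold().split())
    if a == b:
        return "verbatim repost"
    ratio = SequenceMatcher(None, a, b).ratio()
    return "copy with minor changes" if ratio >= threshold else "different text"


# Made-up sample texts, for illustration only.
print(relation("The fund misuses donations every month",
               "The fund misuses donations each month"))
```

A character-level ratio is a crude measure; it works for short posts with small edits, but longer or translated texts would need a different comparison.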

At the beginning of the full-scale Russian invasion, Ukrainians showed themselves to be a united and freedom-loving people. Whether it was fundraisers opened by volunteers and closed within minutes, high-profile communication campaigns, or creative approaches to drawing attention to fundraising, it all worked together. Over time, war fatigue, the unpopular mobilization campaign, the Russian offensive and advances, fears that the newly elected American president will cut military aid to Ukraine, and blackouts have all contributed to a declining level of Ukrainian involvement in raising money for the army. And the needs are growing. Volunteers need to work three times harder, creatives need to come up with ideas that stand out even more, and opinion leaders need to be even louder on social media, even as some platforms hide sensitive content. At the same time, a campaign to devalue the work of Foundations, incite hatred, and foster distrust of their leaders or ambassadors goes on daily on the same social media.

If you have any questions or want to learn more about the research, please get in touch with us at [email protected].